To install Oracle RAC for training purposes or to run certain tests, we usually use Oracle VirtualBox or VMware Workstation.

To do this, we have to create one virtual machine per cluster node, install an operating system on each of them, and then carry out a number of steps on each operating system to prepare it for the cluster installation. Configuring a cluster in a VM environment is fairly time-consuming and can also be somewhat complex.

However, VMs are not our only option here: Docker is an alternative that can remove these drawbacks.

Since version 12c, Oracle has made it possible to run Oracle RAC in Docker for test and development environments, and with version 21c it also supports running Oracle RAC on Docker in production.

Oracle RAC on Docker can run on a single host or across several hosts. In this article we use a single host only and leave the multi-host setup for another time.

The operating system we use is Oracle Linux 7, and we are going to run Oracle RAC 21c in this environment. The setup uses three containers: one for the DNS server, and two others that each form one node of the cluster.

First, let's review the configuration steps:

1. Installing and starting Docker

2. Preparing the Docker host

3. Setting up the Docker networks

4. Installing git and cloning the Oracle repository

5. Creating the DNS container

6. Creating the container for the first cluster node

7. Adding the second cluster node

Installing and starting Docker

Since installing Docker on Oracle Linux has already been covered in “Installing Docker on Oracle Linux”, we won't repeat it here; we simply check the status of the docker service with the following command:

[root@OEL7 ~]# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2022-10-05 13:43:33 +0330; 8ms ago

Docs: https://docs.docker.com

Main PID: 44314 (dockerd)

Tasks: 30

Memory: 84.1M

CGroup: /system.slice/docker.service

└─44314 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --cp...

Preparing the Docker host

To prepare the Docker host, we perform the following steps.

1. Set the following kernel parameters in /etc/sysctl.conf:

[root@OEL7 ~]# vi /etc/sysctl.conf
fs.aio-max-nr = 1048576
fs.file-max = 6815744
net.core.rmem_max = 4194304
net.core.rmem_default = 262144
net.core.wmem_max = 1048576
net.core.wmem_default = 262144

Then apply the changes by running sysctl -p.
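When sysctl.conf is edited by hand more than once, the same key can easily end up listed several times, possibly with different values, and `sysctl -p` applies the lines in order, so the last one wins. As a minimal sketch (assuming bash and awk on the Docker host), a hypothetical helper `set_sysctl_key` can set each key exactly once:

```bash
#!/usr/bin/env bash
# set_sysctl_key FILE KEY VALUE  (hypothetical helper, not part of Oracle's scripts)
# Removes every existing "KEY = ..." or "KEY=..." line from FILE and appends
# exactly one "KEY = VALUE" line, so running it repeatedly never creates duplicates.
set_sysctl_key() {
  local file=$1 key=$2 value=$3 tmp
  touch "$file" || return 1
  tmp=$(mktemp) || return 1
  # Compare the text before '=' (blanks stripped) with KEY exactly;
  # comments, blank lines and other keys are copied through unchanged.
  awk -F'=' -v k="$key" '{ name = $1; gsub(/[ \t]/, "", name); if (name != k) print }' \
    "$file" > "$tmp"
  printf '%s = %s\n' "$key" "$value" >> "$tmp"
  # Write back through the original file so its owner and permissions are kept.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example, `set_sysctl_key /etc/sysctl.conf net.core.rmem_default 262144`, repeated for each parameter in the list above, followed by `sysctl -p`.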

2. Add the --cpu-rt-runtime=950000 parameter to the dockerd command line in docker.service, as follows:

[root@OEL7 ~]# vi /usr/lib/systemd/system/docker.service
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --cpu-rt-runtime=950000

After making this change, reload systemd and restart the Docker service:

[root@OEL7 ~]# systemctl daemon-reload
[root@OEL7 ~]# systemctl stop docker
[root@OEL7 ~]# systemctl restart docker
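Editing /usr/lib/systemd/system/docker.service directly works, but that file belongs to the docker package and may be replaced when the package is upgraded. A common alternative, shown here as a sketch rather than the procedure from Oracle's README, is a systemd drop-in file. The helper name `write_docker_dropin` and the file name `cpu-rt.conf` are hypothetical:

```bash
#!/usr/bin/env bash
# write_docker_dropin [BASE_DIR]  (hypothetical helper)
# Writes BASE_DIR/docker.service.d/cpu-rt.conf (default BASE_DIR: /etc/systemd/system)
# so that dockerd is started with --cpu-rt-runtime=950000 without touching the
# packaged unit file.
write_docker_dropin() {
  local dir=${1:-/etc/systemd/system}/docker.service.d
  mkdir -p "$dir" || return 1
  cat > "$dir/cpu-rt.conf" <<'EOF'
[Service]
# An empty ExecStart= clears the command inherited from docker.service;
# the next line defines the replacement command.
ExecStart=
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --cpu-rt-runtime=950000
EOF
}
```

After writing the drop-in under /etc/systemd/system, run `systemctl daemon-reload` and `systemctl restart docker`; `systemctl cat docker` then shows the packaged unit together with the drop-in, which makes it easy to confirm the flag is in effect.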

3. Stop and disable the firewall:

[root@OEL7 ~]# systemctl stop firewalld.service
[root@OEL7 ~]# systemctl disable firewalld.service

Creating the Docker networks

As you know, a cluster needs at least two networks, a public one and a private one. In this step we create both of them as Docker bridge networks:

[root@OEL7 ~]# docker network create --driver=bridge --subnet=10.0.20.0/24 rac_pub_nw1
710ae5777ba3cb207b6b0b49ec37e89a0554347bae054d5017af5fa3a35a03cb
[root@OEL7 ~]# docker network create --driver=bridge --subnet=192.168.10.0/24 rac_priv_nw1
f3824262f932161851fde89da802c29a9323f4ff76c1bb8c88fe42b6546c7880
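Every static address assigned later with `--ip` (the DNS server at 10.0.20.2, the node public IPs, the private IPs) must lie inside the subnet of the network it is attached to, and Docker refuses an address outside it; the VIP and SCAN addresses planned in DNS are also expected to be in the public subnet. A small bash sketch for checking a planned address against a subnet before using it; the function names are hypothetical:

```bash
#!/usr/bin/env bash
# ip_to_int A.B.C.D -> the address as a 32-bit integer (hypothetical helper)
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# ip_in_cidr IP NETWORK/PREFIX -> exit status 0 if IP lies inside the subnet
ip_in_cidr() {
  local ip net prefix mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  # Build the netmask, e.g. /24 -> 0xFFFFFF00, and compare the network parts.
  mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}
```

For example, `ip_in_cidr 10.0.20.161 10.0.20.0/24 && echo ok` confirms that racnode1's VIP is in rac_pub_nw1's subnet, while `ip_in_cidr 192.168.10.151 10.0.20.0/24` fails.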

Installing git and cloning the Oracle repository

Next, install git:

[root@OEL7 ~]# yum install -y git

After installing git, clone the Oracle repository into the /OracleRepoGIT directory:

[root@OEL7 ~]# mkdir /OracleRepoGIT
[root@OEL7 ~]# cd /OracleRepoGIT
[root@OEL7 OracleRepoGIT]# git clone https://github.com/oracle/docker-images.git
Cloning into 'docker-images'...
remote: Enumerating objects: 16096, done.
remote: Counting objects: 100% (15/15), done.
remote: Compressing objects: 100% (15/15), done.
remote: Total 16096 (delta 3), reused 1 (delta 0), pack-reused 16081
Receiving objects: 100% (16096/16096), 10.47 MiB | 3.86 MiB/s, done.
Resolving deltas: 100% (9512/9512), done.

This creates the following files and directories:

[root@OEL7 OracleRepoGIT]# ls -l
drwxr-xr-x 32 root root 4096 Oct 5 13:48 docker-images
[root@OEL7 docker-images]# ll
drwxr-xr-x 6 root root 110 Oct 5 13:48 Archive
-rw-r--r-- 1 root root 3359 Oct 5 13:48 CODE_OF_CONDUCT.md
-rw-r--r-- 1 root root 1338 Oct 5 13:48 CODEOWNERS
drwxr-xr-x 3 root root 53 Oct 5 13:48 Contrib
-rw-r--r-- 1 root root 9695 Oct 5 13:48 CONTRIBUTING.md
drwxr-xr-x 3 root root 16 Oct 5 13:48 GraalVM
-rw-r--r-- 1 root root 1844 Oct 5 13:48 LICENSE.txt
drwxr-xr-x 3 root root 33 Oct 5 13:48 NoSQL
drwxr-xr-x 4 root root 76 Oct 5 13:48 OracleAccessManagement
drwxr-xr-x 3 root root 59 Oct 5 13:48 OracleBI
drwxr-xr-x 4 root root 59 Oct 5 13:48 OracleCloudInfrastructure
drwxr-xr-x 2 root root 23 Oct 5 13:48 OracleCoherence
drwxr-xr-x 4 root root 56 Oct 5 13:48 OracleDatabase
drwxr-xr-x 4 root root 57 Oct 5 13:48 OracleEssbase
drwxr-xr-x 4 root root 57 Oct 5 13:48 OracleFMWInfrastructure
drwxr-xr-x 4 root root 49 Oct 5 13:48 OracleGoldenGate
drwxr-xr-x 4 root root 74 Oct 5 13:48 OracleHTTPServer
drwxr-xr-x 5 root root 91 Oct 5 13:48 OracleIdentityGovernance
drwxr-xr-x 4 root root 63 Oct 5 13:48 OracleInstantClient
drwxr-xr-x 6 root root 62 Oct 5 13:48 OracleJava
drwxr-xr-x 4 root root 63 Oct 5 13:48 OracleLinuxDevelopers
drwxr-xr-x 3 root root 42 Oct 5 13:48 OracleManagementAgent
drwxr-xr-x 3 root root 33 Oct 5 13:48 OracleOpenJDK
drwxr-xr-x 3 root root 77 Oct 5 13:48 OracleRestDataServices
drwxr-xr-x 4 root root 91 Oct 5 13:48 OracleSOASuite
drwxr-xr-x 5 root root 109 Oct 5 13:48 OracleUnifiedDirectory
drwxr-xr-x 4 root root 94 Oct 5 13:48 OracleUnifiedDirectorySM
drwxr-xr-x 3 root root 22 Oct 5 13:48 OracleVeridata
drwxr-xr-x 3 root root 42 Oct 5 13:48 OracleWebCenterContent
drwxr-xr-x 3 root root 42 Oct 5 13:48 OracleWebCenterPortal
drwxr-xr-x 3 root root 59 Oct 5 13:48 OracleWebCenterSites
drwxr-xr-x 4 root root 74 Oct 5 13:48 OracleWebLogic
-rw-r--r-- 1 root root 3127 Oct 5 13:48 README.md

Creating the DNS container

As you know, the SCAN addresses are best served from a DNS server, which lets the single SCAN name resolve to all of its IP addresses. In the following procedure we first build an image for the DNS server and then create a container from it.

First, edit the two template files zonefile and reversezonefile, located under /OracleRepoGIT in the OracleDNSServer/dockerfiles/latest directory, as follows:

[root@OEL7 OracleRepoGIT]# cd /OracleRepoGIT/docker-images/OracleDatabase/RAC/OracleDNSServer/dockerfiles/latest
[root@OEL7 latest]# vi zonefile

$TTL 86400
@       IN SOA  ###DOMAIN_NAME###. root (
                2014090401  ; serial
                3600        ; refresh
                1800        ; retry
                604800      ; expire
                86400 )     ; minimum
; Name server's
        IN NS   ###DOMAIN_NAME###.
; Name server hostname to IP resolve.
        IN A    ###RAC_DNS_SERVER_IP###
; Hosts in this Domain
###HOSTNAME###                  IN A  ###RAC_DNS_SERVER_IP###
###RAC_NODE_NAME_PREFIX###1     IN A  ###RAC_PUBLIC_SUBNET###.151
###RAC_NODE_NAME_PREFIX###2     IN A  ###RAC_PUBLIC_SUBNET###.152
###RAC_NODE_NAME_PREFIX###3     IN A  ###RAC_PUBLIC_SUBNET###.153
###RAC_NODE_NAME_PREFIX###4     IN A  ###RAC_PUBLIC_SUBNET###.154
###RAC_NODE_NAME_PREFIX###1-vip IN A  ###RAC_PUBLIC_SUBNET###.161
###RAC_NODE_NAME_PREFIX###2-vip IN A  ###RAC_PUBLIC_SUBNET###.162
###RAC_NODE_NAME_PREFIX###3-vip IN A  ###RAC_PUBLIC_SUBNET###.163
###RAC_NODE_NAME_PREFIX###4-vip IN A  ###RAC_PUBLIC_SUBNET###.164
###RAC_NODE_NAME_PREFIX###-scan IN A  ###RAC_PUBLIC_SUBNET###.171
###RAC_NODE_NAME_PREFIX###-scan IN A  ###RAC_PUBLIC_SUBNET###.172
###RAC_NODE_NAME_PREFIX###-scan IN A  ###RAC_PUBLIC_SUBNET###.173
###RAC_NODE_NAME_PREFIX###-gns1 IN A  ###RAC_PUBLIC_SUBNET###.175
###RAC_NODE_NAME_PREFIX###-gns2 IN A  ###RAC_PUBLIC_SUBNET###.176

[root@OEL7 latest]# mv reversezonefile reversezonefile-old
[root@OEL7 latest]# vi reversezonefile

$TTL 86400
@       IN SOA  ###DOMAIN_NAME###. root.###DOMAIN_NAME###. (
                2014090402  ; serial
                3600        ; refresh
                1800        ; retry
                604800      ; expire
                86400 )     ; minimum
; Name server's
        IN NS   ###DOMAIN_NAME###.
; Name server hostname to IP resolve.
###HOSTNAME_IP_LAST_DIGITS###   IN PTR  ###HOSTNAME###.###DOMAIN_NAME###.
; Second RAC Cluster on Same Subnet on Docker
151     IN PTR  ###RAC_NODE_NAME_PREFIX###1.###DOMAIN_NAME###.
152     IN PTR  ###RAC_NODE_NAME_PREFIX###2.###DOMAIN_NAME###.
153     IN PTR  ###RAC_NODE_NAME_PREFIX###3.###DOMAIN_NAME###.
154     IN PTR  ###RAC_NODE_NAME_PREFIX###4.###DOMAIN_NAME###.
161     IN PTR  ###RAC_NODE_NAME_PREFIX###1-vip.###DOMAIN_NAME###.
162     IN PTR  ###RAC_NODE_NAME_PREFIX###2-vip.###DOMAIN_NAME###.
163     IN PTR  ###RAC_NODE_NAME_PREFIX###3-vip.###DOMAIN_NAME###.
164     IN PTR  ###RAC_NODE_NAME_PREFIX###4-vip.###DOMAIN_NAME###.
171     IN PTR  ###RAC_NODE_NAME_PREFIX###-scan.###DOMAIN_NAME###.
172     IN PTR  ###RAC_NODE_NAME_PREFIX###-scan.###DOMAIN_NAME###.
173     IN PTR  ###RAC_NODE_NAME_PREFIX###-scan.###DOMAIN_NAME###.
175     IN PTR  ###RAC_NODE_NAME_PREFIX###-gns1.###DOMAIN_NAME###.
176     IN PTR  ###RAC_NODE_NAME_PREFIX###-gns2.###DOMAIN_NAME###.
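When the racnode-dns container starts, it appears to replace the ###...### placeholders in these templates with concrete values (domain name, node-name prefix, public subnet, and its own hostname and IP). To preview what the edited templates will turn into before building the image, a rough sed-based approximation can be used. This is a hypothetical helper (`render_zone`), not the script shipped in the image, and it assumes none of the values contain a `/` character:

```bash
#!/usr/bin/env bash
# render_zone TEMPLATE DOMAIN PREFIX SUBNET DNS_IP DNS_HOSTNAME  (hypothetical)
# Prints TEMPLATE with the ###...### placeholders substituted; an approximation
# of what the DNS container does at startup, for previewing only.
render_zone() {
  local template=$1 domain=$2 prefix=$3 subnet=$4 dns_ip=$5 host=$6
  sed -e "s/###DOMAIN_NAME###/${domain}/g" \
      -e "s/###RAC_NODE_NAME_PREFIX###/${prefix}/g" \
      -e "s/###RAC_PUBLIC_SUBNET###/${subnet}/g" \
      -e "s/###RAC_DNS_SERVER_IP###/${dns_ip}/g" \
      -e "s/###HOSTNAME_IP_LAST_DIGITS###/${dns_ip##*.}/g" \
      -e "s/###HOSTNAME###/${host}/g" \
      "$template"
}
```

For example, `render_zone zonefile example.com racnode 10.0.20 10.0.20.2 racnode-dns > /tmp/example.com.zone`; if BIND's `named-checkzone` is available, `named-checkzone example.com /tmp/example.com.zone` then reports syntax errors before the image is built.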

Run the following commands to build the image for the DNS container:

[root@OEL7 latest]# cd /OracleRepoGIT/docker-images/OracleDatabase/RAC/OracleDNSServer/dockerfiles
[root@OEL7 dockerfiles]# ./buildContainerImage.sh -v latest

==========================

DOCKER info:

Client:

Debug Mode: false

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 19.03.11-ol

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Native Overlay Diff: false

Logging Driver: json-file

Cgroup Driver: cgroupfs

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 7eba5930496d9bbe375fdf71603e610ad737d2b2

runc version: 52de29d

init version: fec3683

Security Options:

seccomp

Profile: default

Kernel Version: 4.14.35-1818.3.3.el7uek.x86_64

Operating System: Oracle Linux Server 7.6

OSType: linux

Architecture: x86_64

CPUs: 56

Total Memory: 755.5GiB

Name: OEL7

ID: LE4E:FM37:S2RL:AUUF:CNFH:KZHE:FYT4:L6P2:EGYT:JF7S:6JWE:QAGZ

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

Loaded plugins: ovl

Cleaning repos: ol7_latest

Removing intermediate container be8a4c33ec0e

---> 9ea5cc12a920

Step 9/11 : USER orcladmin

---> Running in fd4fd39557aa

Removing intermediate container fd4fd39557aa

---> 3ff83fb548b8

Step 10/11 : WORKDIR /home/orcladmin

---> Running in f3719e4b73f6

Removing intermediate container f3719e4b73f6

---> 9231bfa5d4d8

Step 11/11 : CMD exec $SCRIPT_DIR/$RUN_FILE

---> Running in 8ee8f356b32a

Removing intermediate container 8ee8f356b32a

---> e8076b822446

Successfully built e8076b822446

Successfully tagged oracle/rac-dnsserver:latest

Oracle Database Docker Image for Real Application Clusters (RAC) version latest is ready to be extended:

--> oracle/rac-dnsserver:latest

Build completed in 609 seconds.

An image named oracle/rac-dnsserver has been created:

[root@OEL7 latest]# docker image ls
REPOSITORY             TAG      IMAGE ID       CREATED       SIZE
oracle/rac-dnsserver   latest   e8076b822446   5 hours ago   285MB
oraclelinux            7-slim   60807cf6683b   12 days ago   135MB

From this image we create the racnode-dns container:

[root@OEL7 dockerfiles]# docker run -d --name racnode-dns \
  --hostname racnode-dns \
  --dns-search="example.com" \
  --cap-add=SYS_ADMIN \
  --network rac_pub_nw1 \
  --ip 10.0.20.2 \
  --sysctl net.ipv6.conf.all.disable_ipv6=1 \
  --env SETUP_DNS_CONFIG_FILES="setup_true" \
  --env DOMAIN_NAME="example.com" \
  --env RAC_NODE_NAME_PREFIX="racnode" \
  oracle/rac-dnsserver:latest

c2374d70d683d211e70a61bdf835b8e9eecb8b72cd0c789c0129ebc503f8315b

The following command shows the container's log:

[root@OEL7 dockerfiles]# docker logs -f racnode-dns

10-05-2022 10:30:41 UTC : : Creating /tmp/orod.log
10-05-2022 10:30:41 UTC : : HOSTNAME is set to racnode-dns
10-05-2022 10:30:41 UTC : : RAC_PUBLIC_SUBNET is set to 10.0.20
10-05-2022 10:30:41 UTC : : HOSTNAME_IP_LAST_DIGITS is set to 2
10-05-2022 10:30:41 UTC : : RAC_DNS_SERVER_IP is set to 10.0.20.2
10-05-2022 10:30:41 UTC : : RAC_PUBLIC_REVERSE_IP set to 20.0.10
10-05-2022 10:30:41 UTC : : Creating Directories
10-05-2022 10:30:41 UTC : : Copying files to destination dir
10-05-2022 10:30:41 UTC : : Setting up Zone file
10-05-2022 10:30:41 UTC : : Setting up reverse Zone file
10-05-2022 10:30:41 UTC : : Setting ip named configuration file
10-05-2022 10:30:41 UTC : : Setting up Resolve.conf file
10-05-2022 10:30:41 UTC : : Starting DNS Server
10-05-2022 10:30:41 UTC : : Checking DNS Server
Server: 10.0.20.2
Address: 10.0.20.2#53
Name: racnode-dns.example.com
Address: 10.0.20.2
10-05-2022 10:30:41 UTC : : DNS Server started sucessfully
10-05-2022 10:30:41 UTC : : ################################################
10-05-2022 10:30:41 UTC : : DNS Server IS READY TO USE!
10-05-2022 10:30:41 UTC : : ################################################
10-05-2022 10:30:41 UTC : : DNS Server Started Successfully
10-05-2022 10:30:41 UTC : : Setting up reverse Zone file
10-05-2022 10:30:41 UTC : : Setting ip named configuration file
10-05-2022 10:30:41 UTC : : Setting up Resolve.conf file
10-05-2022 10:30:41 UTC : : Starting DNS Server
10-05-2022 10:30:41 UTC : : Checking DNS Server
10-05-2022 10:30:41 UTC : : DNS Server started sucessfully
10-05-2022 10:30:41 UTC : : ################################################
10-05-2022 10:30:41 UTC : : DNS Server IS READY TO USE!
10-05-2022 10:30:41 UTC : : ################################################
10-05-2022 10:30:41 UTC : : DNS Server Started Successfully
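Because `docker logs -f` keeps following the log until it is interrupted, scripted setups usually poll instead. The sketch below, a hypothetical `wait_for_pattern` helper, re-runs a command every 5 seconds until its output contains a fixed string, such as the DNS container's "DNS Server IS READY TO USE!" line, or gives up after a timeout:

```bash
#!/usr/bin/env bash
# wait_for_pattern TIMEOUT_SECONDS PATTERN CMD [ARGS...]  (hypothetical helper)
# Re-runs CMD until its combined stdout/stderr contains PATTERN (a fixed
# string, not a regex). Returns 1 once TIMEOUT_SECONDS have elapsed.
wait_for_pattern() {
  local timeout=$1 pattern=$2 waited=0
  shift 2
  until "$@" 2>&1 | grep -qF -- "$pattern"; do
    if (( waited >= timeout )); then
      echo "timed out after ${timeout}s waiting for: $pattern" >&2
      return 1
    fi
    sleep 5
    waited=$(( waited + 5 ))
  done
}
```

Usage: `wait_for_pattern 300 'DNS Server IS READY TO USE!' docker logs racnode-dns`. Note that `docker logs` is called without `-f` here, so each call prints the log so far and exits.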

The following command shows the container's status:

[root@OEL7 ~]# docker container ls
CONTAINER ID   IMAGE                         COMMAND                  CREATED       STATUS       PORTS   NAMES
c2374d70d683   oracle/rac-dnsserver:latest   "/bin/sh -c 'exec $S…"   5 hours ago   Up 5 hours           racnode-dns

To verify that DNS works correctly, connect to the container and use nslookup:

[root@OEL7 ~]# docker exec -it racnode-dns /bin/bash
[orcladmin@racnode-dns ~]$ nslookup racnode1
Server: 10.0.20.2
Address: 10.0.20.2#53
Name: racnode1.example.com
Address: 10.0.20.151
[orcladmin@racnode-dns ~]$ nslookup racnode2
Server: 10.0.20.2
Address: 10.0.20.2#53
Name: racnode2.example.com
Address: 10.0.20.152
[orcladmin@racnode-dns ~]$ nslookup 10.0.20.152
152.20.0.10.in-addr.arpa name = racnode2.example.com.
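A reverse lookup such as `nslookup 10.0.20.152` queries the PTR record of the name formed by reversing the address octets under in-addr.arpa, which is why the answer above reads 152.20.0.10.in-addr.arpa; reversing the first three octets of the subnet the same way gives the "20.0.10" that the container log reports as RAC_PUBLIC_REVERSE_IP. A short bash sketch of both derivations (function names hypothetical):

```bash
#!/usr/bin/env bash
# ptr_name A.B.C.D -> D.C.B.A.in-addr.arpa (the name a reverse lookup queries)
ptr_name() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  printf '%s.%s.%s.%s.in-addr.arpa\n' "${o[3]}" "${o[2]}" "${o[1]}" "${o[0]}"
}

# reverse_prefix A.B.C -> C.B.A (a /24 prefix in reverse-zone order)
reverse_prefix() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  printf '%s.%s.%s\n' "${o[2]}" "${o[1]}" "${o[0]}"
}
```

`ptr_name 10.0.20.171` gives the name behind the SCAN reverse lookup, and `reverse_prefix 10.0.20` gives 20.0.10, so the reverse zone for this subnet is 20.0.10.in-addr.arpa.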

Three SCAN IPs have also been defined for the cluster we are about to install:

[orcladmin@racnode-dns ~]$ nslookup racnode-scan.example.com.
Server: 10.0.20.2
Address: 10.0.20.2#53
Name: racnode-scan.example.com
Address: 10.0.20.173
Name: racnode-scan.example.com
Address: 10.0.20.172
Name: racnode-scan.example.com
Address: 10.0.20.171
[orcladmin@racnode-dns ~]$ nslookup 10.0.20.171
171.20.0.10.in-addr.arpa name = racnode-scan.example.com.
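To check the SCAN entry non-interactively, the nslookup output can be piped into a small filter that counts the answer addresses while skipping the `Address: ...#53` line that identifies the DNS server itself. This is a sketch that assumes the nslookup output format shown above; other nslookup versions may format their output differently, and `count_answers` is a hypothetical name:

```bash
#!/usr/bin/env bash
# count_answers: reads nslookup output on stdin and prints the number of
# answer addresses, ignoring the server's own "Address: <ip>#53" line.
count_answers() {
  grep -E '^Address:[[:space:]]' | grep -vc '#53$'
}
```

For example, `docker exec racnode-dns nslookup racnode-scan.example.com | count_answers` should print 3 for the zone configured above.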

Creating the container for the first cluster node

In this step we build the Docker image for Oracle RAC. To do so, copy the Grid Infrastructure and Database installation files into the following directory:

[root@OEL7 dockerfiles]# cd /OracleRepoGIT/docker-images/OracleDatabase/RAC/OracleRealApplicationClusters/dockerfiles/21.3.0/
[root@OEL7 21.3.0]# cp /source/LINUX.X64_213000_* .

After copying the files, build the cluster image with the following commands:

[root@OEL7 ~]# cd /OracleRepoGIT/docker-images/OracleDatabase/RAC/OracleRealApplicationClusters/dockerfiles
[root@OEL7 dockerfiles]# ./buildContainerImage.sh -v 21.3.0

Checking if required packages are present and valid...

LINUX.X64_213000_db_home.zip: OK

LINUX.X64_213000_grid_home.zip: OK

==========================

DOCKER info:

Client:

Debug Mode: false

Server:

Containers: 1

Running: 1

Paused: 0

Stopped: 0

Images: 25

Server Version: 19.03.11-ol

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Native Overlay Diff: false

Logging Driver: json-file

Cgroup Driver: cgroupfs

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 7eba5930496d9bbe375fdf71603e610ad737d2b2

runc version: 52de29d

init version: fec3683

Security Options:

seccomp

Profile: default

Kernel Version: 4.14.35-1818.3.3.el7uek.x86_64

Operating System: Oracle Linux Server 7.6

OSType: linux

Architecture: x86_64

CPUs: 56

Total Memory: 755.5GiB

Name: OEL7

ID: LE4E:FM37:S2RL:AUUF:CNFH:KZHE:FYT4:L6P2:EGYT:JF7S:6JWE:QAGZ

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

Registries:

==========================

Building image 'oracle/database-rac:21.3.0' ...

Sending build context to Docker daemon 5.532GB

Step 1/26 : ARG BASE_OL_IMAGE=oraclelinux:7-slim

Step 2/26 : FROM $BASE_OL_IMAGE AS base

---> 60807cf6683b

Step 3/26 : LABEL "provider"="Oracle" "issues"="https://github.com/oracle/docker-images/issues" "volume.setup.location1"="/opt/scripts" "volume.startup.location1"="/opt/scripts/startup" "port.listener"="1521" "port.oemexpress"="5500"

---> Running in 38596d9a815a

……

---> Running in fbb006aefde1

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

Check /u01/app/21.3.0/grid/install/root_fbb006aefde1_2022-10-05_11-13-31-659210429.log for the output of root script

Check /u01/app/oracle/product/21.3.0/dbhome_1/install/root_fbb006aefde1_2022-10-05_11-13-31-779049670.log for the output of root script

Preparing... ########################################

Updating / installing...

cvuqdisk-1.0.10-1 ########################################

Removing intermediate container fbb006aefde1

---> 98380a74fcf1

Step 23/26 : USER ${USER}

---> Running in 3d39de0dd47e

Removing intermediate container 3d39de0dd47e

---> c03fe8661c27

Step 24/26 : VOLUME ["/common_scripts"]

---> Running in ae9f2de3b766

Removing intermediate container ae9f2de3b766

---> dc3628ed0345

Step 25/26 : WORKDIR $WORKDIR

---> Running in 511736d2dc3a

Removing intermediate container 511736d2dc3a

---> 6bd0ac840e4b

Step 26/26 : ENTRYPOINT /usr/bin/$INITSH

---> Running in 1376bcd74cfb

Removing intermediate container 1376bcd74cfb

---> 023775e51a76

Successfully built 023775e51a76

Successfully tagged oracle/database-rac:21.3.0

Oracle Database Docker Image for Real Application Clusters (RAC) version 21.3.0 is ready to be extended:

--> oracle/database-rac:21.3.0

Build completed in 1513 seconds.

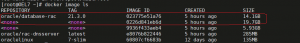

The Oracle RAC image has been created and is ready to run:

[root@OEL7 ~]# docker image ls
REPOSITORY             TAG      IMAGE ID       CREATED       SIZE
oracle/database-rac    21.3.0   023775e51a76   5 hours ago   14.1GB
<none>                 <none>   0226d841eb6d   5 hours ago   19.7GB
<none>                 <none>   9936f433aeb4   5 hours ago   5.93GB
oracle/rac-dnsserver   latest   e8076b822446   5 hours ago   285MB
oraclelinux            7-slim   60807cf6683b   12 days ago   135MB

Before running a container from this image, we must create the following files on the Docker host so that they are available to all containers.

The first is rac_host_file, which is mounted as /etc/hosts in the containers so that the hosts file (used for name resolution) is shared among all of them:

[root@OEL7 ~]# mkdir /opt/containers; touch /opt/containers/rac_host_file

As you know, when installing Grid Infrastructure and the Oracle software, passwords have to be set for the grid and oracle OS users, and the same applies to the database users. For this, run the following commands to create an encrypted password file on the Docker host; this password is then used throughout the cluster setup:

[root@OEL7 ~]# mkdir /opt/.secrets/
[root@OEL7 ~]# openssl rand -out /opt/.secrets/pwd.key -hex 64
[root@OEL7 ~]# echo "Welcome1" > /opt/.secrets/common_os_pwdfile
[root@OEL7 ~]# openssl enc -aes-256-cbc -salt -in /opt/.secrets/common_os_pwdfile -out /opt/.secrets/common_os_pwdfile.enc -pass file:/opt/.secrets/pwd.key
[root@OEL7 ~]# rm -f /opt/.secrets/common_os_pwdfile
[root@OEL7 ~]# chmod 400 /opt/.secrets/common_os_pwdfile.enc; chmod 400 /opt/.secrets/pwd.key
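Because the plaintext file is deleted, it is worth confirming that the encrypted file and key actually decrypt back to the intended password before the containers depend on them. A sketch, assuming the check runs on the same host (and therefore with the same openssl defaults) that performed the encryption; `verify_pwdfile` is a hypothetical name:

```bash
#!/usr/bin/env bash
# verify_pwdfile DIR EXPECTED  (hypothetical helper)
# Decrypts DIR/common_os_pwdfile.enc with DIR/pwd.key using the same cipher
# options as the encryption step and compares the result with EXPECTED.
verify_pwdfile() {
  local dir=$1 expected=$2 got
  got=$(openssl enc -d -aes-256-cbc -in "$dir/common_os_pwdfile.enc" \
        -pass "file:$dir/pwd.key" 2>/dev/null) || return 1
  # $(...) strips the trailing newline that echo added before encryption.
  [[ "$got" == "$expected" ]]
}
```

Usage: `verify_pwdfile /opt/.secrets Welcome1 && echo "password file OK"`.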

As you know, Oracle RAC needs storage shared between the two nodes. If you have a dedicated disk or partition on the host for this, you can skip this step; otherwise you can use dd to create files that play the role of those disks and attach them to loop devices with losetup:

[root@OEL7 ~]# dd if=/dev/zero of=/oracle/ASM1 bs=1024 count=50388608
50388608+0 records in
50388608+0 records out
51597934592 bytes (52 GB) copied, 137.139 s, 376 MB/s
[root@OEL7 ~]# dd if=/dev/zero of=/oracle/ASM2 bs=1024 count=50388608
50388608+0 records in
50388608+0 records out
51597934592 bytes (52 GB) copied, 142.893 s, 361 MB/s
[root@OEL7 ~]# losetup /dev/loop0 /oracle/ASM1
[root@OEL7 ~]# losetup /dev/loop1 /oracle/ASM2

Creating the container

Run the following command to create a container named racnode1, in which the first cluster node will be set up and run:

[root@OEL7 ~]# docker create -t -i \
  --hostname racnode1 \
  --volume /boot:/boot:ro \
  --volume /dev/shm \
  --tmpfs /dev/shm:rw,exec,size=4G \
  --volume /opt/containers/rac_host_file:/etc/hosts \
  --volume /opt/.secrets:/run/secrets:ro \
  --volume /etc/localtime:/etc/localtime:ro \
  --cpuset-cpus 0-3 \
  --memory 16G \
  --memory-swap 32G \
  --sysctl kernel.shmall=2097152 \
  --sysctl "kernel.sem=250 32000 100 128" \
  --sysctl kernel.shmmax=8589934592 \
  --sysctl kernel.shmmni=4096 \
  --dns-search=example.com \
  --dns=10.0.20.2 \
  --device=/dev/loop0:/dev/asm_disk1 \
  --device=/dev/loop1:/dev/asm_disk2 \
  --privileged=false \
  --cap-add=SYS_NICE \
  --cap-add=SYS_RESOURCE \
  --cap-add=NET_ADMIN \
  -e DNS_SERVERS=10.0.20.2 \
  -e NODE_VIP=10.0.20.161 \
  -e VIP_HOSTNAME=racnode1-vip \
  -e PRIV_IP=192.168.10.151 \
  -e PRIV_HOSTNAME=racnode1-priv \
  -e PUBLIC_IP=10.0.20.151 \
  -e PUBLIC_HOSTNAME=racnode1 \
  -e SCAN_NAME=racnode-scan \
  -e OP_TYPE=INSTALL \
  -e DOMAIN=example.com \
  -e ASM_DEVICE_LIST=/dev/asm_disk1,/dev/asm_disk2 \
  -e ASM_DISCOVERY_DIR=/dev \
  -e COMMON_OS_PWD_FILE=common_os_pwdfile.enc \
  -e PWD_KEY=pwd.key \
  --restart=always --tmpfs=/run -v /sys/fs/cgroup:/sys/fs/cgroup:ro \
  --cpu-rt-runtime=95000 --ulimit rtprio=99 \
  --name racnode1 \
  oracle/database-rac:21.3.0
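The shared-memory sysctls in this command use different units: kernel.shmmax is given in bytes, while kernel.shmall is a number of pages. With the usual 4 KiB page size both values here describe 8 GiB, since 8589934592 / 4096 = 2097152. A one-function bash sketch of that conversion (function name hypothetical):

```bash
#!/usr/bin/env bash
# shmall_for SHMMAX_BYTES [PAGE_SIZE]  (hypothetical helper)
# Converts a byte count into the page count used by kernel.shmall.
# PAGE_SIZE defaults to the host's page size as reported by getconf.
shmall_for() {
  local shmmax=$1 page=${2:-$(getconf PAGE_SIZE)}
  echo $(( shmmax / page ))
}
```

For example, `shmall_for 8589934592 4096` reproduces the kernel.shmall value passed to racnode1 above.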

2b66bf78fe1eecb1868f29e29b89eddee836496363e8df41c7377fa5ecf9b736

Before starting this container, disconnect it from Docker's default bridge network and connect it to the two networks created earlier:

[root@OEL7 ~]# docker network disconnect bridge racnode1
[root@OEL7 ~]# docker network connect rac_pub_nw1 --ip 10.0.20.151 racnode1
[root@OEL7 ~]# docker network connect rac_priv_nw1 --ip 192.168.10.151 racnode1
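Once the container is running (next step), these attachments can be checked with a `docker inspect` Go template that prints each attached network and its address. The sketch below assumes that output format; `attachments_of` and `check_attachments` are hypothetical helpers, the second of which reports any expected `network=ip` pair that is missing:

```bash
#!/usr/bin/env bash
# attachments_of CONTAINER -> "net1=ip1 net2=ip2 " for a running container
attachments_of() {
  docker inspect -f \
    '{{range $net, $cfg := .NetworkSettings.Networks}}{{$net}}={{$cfg.IPAddress}} {{end}}' "$1"
}

# check_attachments "ACTUAL" NET=IP...  (hypothetical helper)
# Returns 1 and prints each expected NET=IP pair that is not present in ACTUAL.
check_attachments() {
  local actual=" $1 " pair rc=0
  shift
  for pair in "$@"; do
    if [[ "$actual" != *" $pair "* ]]; then
      echo "expected attachment not found: $pair" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_attachments "$(attachments_of racnode1)" rac_pub_nw1=10.0.20.151 rac_priv_nw1=192.168.10.151`. A leftover `bridge=...` entry in the output would indicate that the disconnect step was skipped.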

With these settings in place, start the container and follow its log:

[root@OEL7 backup]# docker start racnode1
racnode1
[root@OEL7 backup]# docker logs -f racnode1

Creating env variables file /etc/rac_env_vars

Starting Systemd

systemd 219 running in system mode. (+PAM +AUDIT +SELINUX +IMA -APPARMOR +SMACK +SYSVINIT +UTMP +LIBCRYPTSETUP +GCRYPT +GNUTLS +ACL +XZ +LZ4 -SECCOMP +BLKID +ELFUTILS +KMOD +IDN)

Detected virtualization other.

Detected architecture x86-64.

Welcome to Oracle Linux Server 7.9!

Set hostname to <racnode1>.

[/usr/lib/systemd/system/systemd-pstore.service:22] Unknown lvalue 'StateDirectory' in section 'Service'

Cannot add dependency job for unit display-manager.service, ignoring: Unit not found.

[ OK ] Started Forward Password Requests to Wall Directory Watch.

[ OK ] Reached target Local Encrypted Volumes.

[ OK ] Started Dispatch Password Requests to Console Directory Watch.

[ OK ] Reached target RPC Port Mapper.

[ OK ] Created slice Root Slice.

[ OK ] Created slice User and Session Slice.

[ OK ] Created slice System Slice.

[ OK ] Created slice system-getty.slice.

[ OK ] Reached target Slices.

[ OK ] Listening on Delayed Shutdown Socket.

[ OK ] Reached target Swap.

[ OK ] Listening on Journal Socket.

Starting Rebuild Hardware Database...

[ OK ] Reached target Local File Systems (Pre).

Starting Journal Service...

Starting Read and set NIS domainname from /etc/sysconfig/network...

Starting Configure read-only root support...

[ OK ] Listening on /dev/initctl Compatibility Named Pipe.

[ OK ] Started Read and set NIS domainname from /etc/sysconfig/network.

[ OK ] Started Journal Service.

Starting Flush Journal to Persistent Storage...

[ OK ] Started Configure read-only root support.

[ OK ] Reached target Local File Systems.

Starting Rebuild Journal Catalog...

Starting Preprocess NFS configuration...

Starting Mark the need to relabel after reboot...

Starting Load/Save Random Seed...

[ OK ] Started Flush Journal to Persistent Storage.

[ OK ] Started Mark the need to relabel after reboot.

Starting Create Volatile Files and Directories...

[ OK ] Started Rebuild Journal Catalog.

[ OK ] Started Load/Save Random Seed.

[ OK ] Started Preprocess NFS configuration.

[ OK ] Started Create Volatile Files and Directories.

Mounting RPC Pipe File System...

Starting Update UTMP about System Boot/Shutdown...

[FAILED] Failed to mount RPC Pipe File System.

See 'systemctl status var-lib-nfs-rpc_pipefs.mount' for details.

[DEPEND] Dependency failed for rpc_pipefs.target.

[DEPEND] Dependency failed for RPC security service for NFS client and server.

[ OK ] Started Update UTMP about System Boot/Shutdown.

[ OK ] Started Rebuild Hardware Database.

Starting Update is Completed...

[ OK ] Started Update is Completed.

[ OK ] Reached target System Initialization.

[ OK ] Started Flexible branding.

[ OK ] Reached target Paths.

[ OK ] Listening on RPCbind Server Activation Socket.

Starting RPC bind service...

[ OK ] Started Daily Cleanup of Temporary Directories.

[ OK ] Reached target Timers.

[ OK ] Listening on D-Bus System Message Bus Socket.

[ OK ] Reached target Sockets.

[ OK ] Reached target Basic System.

Starting OpenSSH Server Key Generation...

Starting Resets System Activity Logs...

Starting GSSAPI Proxy Daemon...

[ OK ] Started D-Bus System Message Bus.

Starting Self Monitoring and Reporting Technology (SMART) Daemon...

Starting LSB: Bring up/down networking...

Starting Login Service...

[ OK ] Started RPC bind service.

[ OK ] Started Resets System Activity Logs.

[ OK ] Started GSSAPI Proxy Daemon.

Starting Cleanup of Temporary Directories...

[ OK ] Reached target NFS client services.

[ OK ] Reached target Remote File Systems (Pre).

[ OK ] Reached target Remote File Systems.

Starting Permit User Sessions...

[ OK ] Started Cleanup of Temporary Directories.

[ OK ] Started Permit User Sessions.

[ OK ] Started Command Scheduler.

[ OK ] Started Login Service.

[ OK ] Started OpenSSH Server Key Generation.

[ OK ] Started LSB: Bring up/down networking.

[ OK ] Reached target Network.

[ OK ] Reached target Network is Online.

Starting Notify NFS peers of a restart...

Starting OpenSSH server daemon...

Starting /etc/rc.d/rc.local Compatibility...

[ OK ] Started Notify NFS peers of a restart.

[ OK ] Started /etc/rc.d/rc.local Compatibility.

[ OK ] Started Console Getty.

[ OK ] Reached target Login Prompts.

[ OK ] Started OpenSSH server daemon.

10-05-2022 14:50:53 +0330 : : Process id of the program :

10-05-2022 14:50:53 +0330 : : #################################################

10-05-2022 14:50:53 +0330 : : Starting Grid Installation

10-05-2022 14:50:53 +0330 : : #################################################

10-05-2022 14:50:53 +0330 : : Pre-Grid Setup steps are in process

10-05-2022 14:50:53 +0330 : : Process id of the program :

10-05-2022 14:50:53 +0330 : : Disable failed service var-lib-nfs-rpc_pipefs.mount

10-05-2022 14:50:53 +0330 : : Resetting Failed Services

10-05-2022 14:50:53 +0330 : : Sleeping for 60 seconds

[ OK ] Started Self Monitoring and Reporting Technology (SMART) Daemon.

[ OK ] Reached target Multi-User System.

[ OK ] Reached target Graphical Interface.

Starting Update UTMP about System Runlevel Changes...

[ OK ] Started Update UTMP about System Runlevel Changes.

Oracle Linux Server 7.9

Kernel 4.14.35-1818.3.3.el7uek.x86_64 on an x86_64

racnode1 login: 10-05-2022 14:51:53 +0330 : : Systemctl state is running!

10-05-2022 14:51:53 +0330 : : Setting correct permissions for /bin/ping

10-05-2022 14:51:53 +0330 : : Public IP is set to 10.0.20.151

10-05-2022 14:51:53 +0330 : : RAC Node PUBLIC Hostname is set to racnode1

10-05-2022 14:51:53 +0330 : : Preparing host line for racnode1

10-05-2022 14:51:53 +0330 : : Adding \n10.0.20.151\tracnode1.example.com\tracnode1 to /etc/hosts

10-05-2022 14:51:53 +0330 : : Preparing host line for racnode1-priv

10-05-2022 14:51:53 +0330 : : Adding \n192.168.10.151\tracnode1-priv.example.com\tracnode1-priv to /etc/hosts

10-05-2022 14:51:53 +0330 : : Preparing host line for racnode1-vip

10-05-2022 14:51:53 +0330 : : Adding \n10.0.20.161\tracnode1-vip.example.com\tracnode1-vip to /etc/hosts

10-05-2022 14:51:53 +0330 : : Preparing host line for racnode-scan

10-05-2022 14:51:53 +0330 : : Preapring Device list

10-05-2022 14:51:53 +0330 : : Changing Disk permission and ownership /dev/asm_disk1

10-05-2022 14:51:53 +0330 : : Changing Disk permission and ownership /dev/asm_disk2

10-05-2022 14:51:53 +0330 : : Preapring Dns Servers list

10-05-2022 14:51:53 +0330 : : Setting DNS Servers

10-05-2022 14:51:53 +0330 : : Adding nameserver 10.0.20.2 in /etc/resolv.conf.

10-05-2022 14:51:53 +0330 : : #####################################################################

10-05-2022 14:51:53 +0330 : : RAC setup will begin in 2 minutes

10-05-2022 14:51:53 +0330 : : ####################################################################

10-05-2022 14:52:23 +0330 : : ###################################################

10-05-2022 14:52:23 +0330 : : Pre-Grid Setup steps completed

10-05-2022 14:52:23 +0330 : : ###################################################

10-05-2022 14:52:23 +0330 : : Checking if grid is already configured

10-05-2022 14:52:23 +0330 : : Process id of the program :

10-05-2022 14:52:23 +0330 : : Public IP is set to 10.0.20.151

10-05-2022 14:52:23 +0330 : : RAC Node PUBLIC Hostname is set to racnode1

10-05-2022 14:52:23 +0330 : : Domain is defined to example.com

10-05-2022 14:52:23 +0330 : : Default setting of AUTO GNS VIP set to false. If you want to use AUTO GNS VIP, please pass DHCP_CONF as an env parameter set to true

10-05-2022 14:52:23 +0330 : : RAC VIP set to 10.0.20.161

10-05-2022 14:52:23 +0330 : : RAC Node VIP hostname is set to racnode1-vip

10-05-2022 14:52:23 +0330 : : SCAN_NAME name is racnode-scan

10-05-2022 14:52:23 +0330 : : SCAN PORT is set to empty string. Setting it to 1521 port.

10-05-2022 14:52:24 +0330 : : 10.0.20.171

10.0.20.173

10.0.20.172

10-05-2022 14:52:24 +0330 : : SCAN Name resolving to IP. Check Passed!

10-05-2022 14:52:24 +0330 : : SCAN_IP set to the empty string

10-05-2022 14:52:24 +0330 : : RAC Node PRIV IP is set to 192.168.10.151

10-05-2022 14:52:24 +0330 : : RAC Node private hostname is set to racnode1-priv

10-05-2022 14:52:24 +0330 : : CMAN_NAME set to the empty string

10-05-2022 14:52:24 +0330 : : CMAN_IP set to the empty string

10-05-2022 14:52:24 +0330 : : Cluster Name is not defined

10-05-2022 14:52:24 +0330 : : Cluster name is set to 'racnode-c'

10-05-2022 14:52:24 +0330 : : Password file generated

10-05-2022 14:52:24 +0330 : : Common OS Password string is set for Grid user

10-05-2022 14:52:24 +0330 : : Common OS Password string is set for Oracle user

10-05-2022 14:52:24 +0330 : : Common OS Password string is set for Oracle Database

10-05-2022 14:52:24 +0330 : : Setting CONFIGURE_GNS to false

10-05-2022 14:52:24 +0330 : : GRID_RESPONSE_FILE env variable set to empty. configGrid.sh will use standard cluster responsefile

10-05-2022 14:52:24 +0330 : : Location for User script SCRIPT_ROOT set to /common_scripts

10-05-2022 14:52:24 +0330 : : IGNORE_CVU_CHECKS is set to true

10-05-2022 14:52:24 +0330 : : Oracle SID is set to ORCLCDB

10-05-2022 14:52:24 +0330 : : Oracle PDB name is set to ORCLPDB

10-05-2022 14:52:24 +0330 : : Check passed for network card eth1 for public IP 10.0.20.151

10-05-2022 14:52:24 +0330 : : Public Netmask : 255.255.255.0

10-05-2022 14:52:24 +0330 : : Check passed for network card eth0 for private IP 192.168.10.151

10-05-2022 14:52:24 +0330 : : Building NETWORK_STRING to set networkInterfaceList in Grid Response File

10-05-2022 14:52:24 +0330 : : Network InterfaceList set to eth1:10.0.20.0:1,eth0:192.168.10.0:5

10-05-2022 14:52:24 +0330 : : Setting random password for grid user

10-05-2022 14:52:24 +0330 : : Setting random password for oracle user

10-05-2022 14:52:24 +0330 : : Calling setupSSH function

10-05-2022 14:52:24 +0330 : : SSh will be setup among racnode1 nodes

10-05-2022 14:52:24 +0330 : : Running SSH setup for grid user between nodes racnode1

10-05-2022 14:53:00 +0330 : : Running SSH setup for oracle user between nodes racnode1

10-05-2022 14:53:05 +0330 : : SSH check fine for the racnode1

10-05-2022 14:53:06 +0330 : : SSH check fine for the oracle@racnode1

10-05-2022 14:53:06 +0330 : : Preapring Device list

10-05-2022 14:53:06 +0330 : : Changing Disk permission and ownership

10-05-2022 14:53:06 +0330 : : Changing Disk permission and ownership

10-05-2022 14:53:06 +0330 : : ASM Disk size : 0

10-05-2022 14:53:06 +0330 : : ASM Device list will be with failure groups /dev/asm_disk1,,/dev/asm_disk2,

10-05-2022 14:53:06 +0330 : : ASM Device list will be groups /dev/asm_disk1,/dev/asm_disk2

10-05-2022 14:53:06 +0330 : : CLUSTER_TYPE env variable is set to STANDALONE, will not process GIMR DEVICE list as default Diskgroup is set to DATA. GIMR DEVICE List will be processed when CLUSTER_TYPE is set to DOMAIN for DSC

10-05-2022 14:53:06 +0330 : : Nodes in the cluster racnode1

10-05-2022 14:53:06 +0330 : : Setting Device permissions for RAC Install on racnode1

10-05-2022 14:53:06 +0330 : : Preapring ASM Device list

10-05-2022 14:53:06 +0330 : : Changing Disk permission and ownership

10-05-2022 14:53:06 +0330 : : Command : su - $GRID_USER -c "ssh $node sudo chown $GRID_USER:asmadmin $device" execute on racnode1

10-05-2022 14:53:06 +0330 : : Command : su - $GRID_USER -c "ssh $node sudo chmod 660 $device" execute on racnode1

10-05-2022 14:53:06 +0330 : : Populate Rac Env Vars on Remote Hosts

10-05-2022 14:53:06 +0330 : : Command : su - $GRID_USER -c "ssh $node sudo echo \"export ASM_DEVICE_LIST=${ASM_DEVICE_LIST}\" >> /etc/rac_env_vars" execute on racnode1

10-05-2022 14:53:06 +0330 : : Changing Disk permission and ownership

10-05-2022 14:53:06 +0330 : : Command : su - $GRID_USER -c "ssh $node sudo chown $GRID_USER:asmadmin $device" execute on racnode1

10-05-2022 14:53:06 +0330 : : Command : su - $GRID_USER -c "ssh $node sudo chmod 660 $device" execute on racnode1

10-05-2022 14:53:06 +0330 : : Populate Rac Env Vars on Remote Hosts

10-05-2022 14:53:06 +0330 : : Command : su - $GRID_USER -c "ssh $node sudo echo \"export ASM_DEVICE_LIST=${ASM_DEVICE_LIST}\" >> /etc/rac_env_vars" execute on racnode1

10-05-2022 14:53:06 +0330 : : Generating Reponsefile

10-05-2022 14:53:06 +0330 : : Running cluvfy Checks

10-05-2022 14:53:06 +0330 : : Performing Cluvfy Checks

10-05-2022 14:53:45 +0330 : : Checking /tmp/cluvfy_check.txt if there is any failed check.

This standalone version of CVU is "454" days old. The latest release of standalone CVU can be obtained from the Oracle support site. Refer to MOS note 2731675.1 for more details.

Performing following verification checks ...

Physical Memory ...PASSED

Available Physical Memory ...PASSED

Swap Size ...PASSED

Free Space: racnode1:/usr,racnode1:/var,racnode1:/etc,racnode1:/sbin,racnode1:/tmp ...PASSED

User Existence: grid ...

Users With Same UID: 54332 ...PASSED

User Existence: grid ...PASSED

Group Existence: asmadmin ...PASSED

Group Existence: asmdba ...PASSED

Group Existence: oinstall ...PASSED

Group Membership: asmdba ...PASSED

Group Membership: asmadmin ...PASSED

Group Membership: oinstall(Primary) ...PASSED

Run Level ...PASSED

Hard Limit: maximum open file descriptors ...PASSED

Soft Limit: maximum open file descriptors ...PASSED

Hard Limit: maximum user processes ...PASSED

Soft Limit: maximum user processes ...PASSED

Soft Limit: maximum stack size ...PASSED

Architecture ...PASSED

OS Kernel Version ...PASSED

OS Kernel Parameter: semmsl ...PASSED

OS Kernel Parameter: semmns ...PASSED

OS Kernel Parameter: semopm ...PASSED

OS Kernel Parameter: semmni ...PASSED

OS Kernel Parameter: shmmax ...PASSED

OS Kernel Parameter: shmmni ...PASSED

OS Kernel Parameter: shmall ...PASSED

OS Kernel Parameter: file-max ...PASSED

OS Kernel Parameter: ip_local_port_range ...PASSED

OS Kernel Parameter: rmem_default ...PASSED

OS Kernel Parameter: rmem_max ...PASSED

OS Kernel Parameter: wmem_default ...PASSED

OS Kernel Parameter: wmem_max ...PASSED

OS Kernel Parameter: aio-max-nr ...PASSED

OS Kernel Parameter: panic_on_oops ...PASSED

Package: kmod-20-21 (x86_64) ...PASSED

Package: kmod-libs-20-21 (x86_64) ...PASSED

Package: binutils-2.23.52.0.1 ...PASSED

Package: libgcc-4.8.2 (x86_64) ...PASSED

Package: libstdc++-4.8.2 (x86_64) ...PASSED

Package: sysstat-10.1.5 ...PASSED

Package: ksh ...PASSED

Package: make-3.82 ...PASSED

Package: glibc-2.17 (x86_64) ...PASSED

Package: glibc-devel-2.17 (x86_64) ...PASSED

Package: libaio-0.3.109 (x86_64) ...PASSED

Package: nfs-utils-1.2.3-15 ...PASSED

Package: smartmontools-6.2-4 ...PASSED

Package: net-tools-2.0-0.17 ...PASSED

Package: policycoreutils-2.5-17 ...PASSED

Package: policycoreutils-python-2.5-17 ...PASSED

Port Availability for component "Oracle Remote Method Invocation (ORMI)" ...PASSED

Port Availability for component "Oracle Notification Service (ONS)" ...PASSED

Port Availability for component "Oracle Cluster Synchronization Services (CSSD)" ...PASSED

Port Availability for component "Oracle Notification Service (ONS) Enterprise Manager support" ...PASSED

Port Availability for component "Oracle Database Listener" ...PASSED

Users With Same UID: 0 ...PASSED

Current Group ID ...PASSED

Root user consistency ...PASSED

Host name ...PASSED

Node Connectivity ...

Hosts File ...PASSED

Check that maximum (MTU) size packet goes through subnet ...PASSED

Node Connectivity ...PASSED

Multicast or broadcast check ...PASSED

ASM Network ...PASSED

Device Checks for ASM ...

Access Control List check ...PASSED

Device Checks for ASM ...PASSED

Same core file name pattern ...PASSED

User Mask ...PASSED

User Not In Group "root": grid ...PASSED

Time zone consistency ...PASSED

Path existence, ownership, permissions and attributes ...

Path "/var" ...PASSED

Path "/var/lib/oracle" ...PASSED

Path "/dev/shm" ...PASSED

Path existence, ownership, permissions and attributes ...PASSED

VIP Subnet configuration check ...PASSED

resolv.conf Integrity ...PASSED

DNS/NIS name service ...

Name Service Switch Configuration File Integrity ...PASSED

DNS/NIS name service ...PASSED

Single Client Access Name (SCAN) ...PASSED

Domain Sockets ...PASSED

Daemon "avahi-daemon" not configured and running ...PASSED

Daemon "proxyt" not configured and running ...PASSED

loopback network interface address ...PASSED

Oracle base: /u01/app/grid ...

'/u01/app/grid' ...PASSED

Oracle base: /u01/app/grid ...PASSED

User Equivalence ...PASSED

RPM Package Manager database ...INFORMATION (PRVG-11250)

Network interface bonding status of private interconnect network interfaces ...PASSED

/dev/shm mounted as temporary file system ...PASSED

File system mount options for path /var ...PASSED

DefaultTasksMax parameter ...PASSED

zeroconf check ...PASSED

ASM Filter Driver configuration ...PASSED

Systemd login manager IPC parameter ...PASSED

Systemd status ...PASSED

Pre-check for cluster services setup was successful.

RPM Package Manager database ...INFORMATION

PRVG-11250 : The check "RPM Package Manager database" was not performed because

it needs 'root' user privileges.

Refer to My Oracle Support notes "2548970.1" for more details regarding errors

PRVG-11250".

CVU operation performed: stage -pre crsinst

Date: Oct 5, 2022 11:23:07 AM

CVU home: /u01/app/21.3.0/grid

User: grid

Operating system: Linux4.14.35-1818.3.3.el7uek.x86_64

10-05-2022 14:53:45 +0330 : : CVU Checks are ignored as IGNORE_CVU_CHECKS set to true. It is recommended to set IGNORE_CVU_CHECKS to false and meet all the cvu checks requirement. RAC installation might fail, if there are failed cvu checks.

10-05-2022 14:53:45 +0330 : : Running Grid Installation

10-05-2022 14:54:38 +0330 : : Running root.sh

10-05-2022 14:54:38 +0330 : : Nodes in the cluster racnode1

10-05-2022 14:54:38 +0330 : : Running root.sh on racnode1

10-05-2022 15:02:34 +0330 : : Running post root.sh steps

10-05-2022 15:02:34 +0330 : : Running post root.sh steps to setup Grid env

10-05-2022 15:03:32 +0330 : : Checking Cluster Status

10-05-2022 15:03:32 +0330 : : Nodes in the cluster

10-05-2022 15:03:32 +0330 : : Removing /tmp/cluvfy_check.txt as cluster check has passed

10-05-2022 15:03:32 +0330 : : Generating DB Responsefile Running DB creation

10-05-2022 15:03:32 +0330 : : Running DB creation

10-05-2022 15:03:33 +0330 : : Workaround for Bug 32449232 : Removing /u01/app/grid/kfod

10-05-2022 15:28:54 +0330 : : Checking DB status

10-05-2022 15:28:55 +0330 : : #################################################################

10-05-2022 15:28:55 +0330 : : Oracle Database ORCLCDB is up and running on racnode1

10-05-2022 15:28:55 +0330 : : #################################################################

10-05-2022 15:28:55 +0330 : : Running User Script

10-05-2022 15:28:55 +0330 : : Setting Remote Listener

10-05-2022 15:28:55 +0330 : : ####################################

10-05-2022 15:28:55 +0330 : : ORACLE RAC DATABASE IS READY TO USE!

10-05-2022 15:28:55 +0330 : : ####################################
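The first node's setup ends with the banner above. Between the first and last log lines shown here, setup took more than half an hour. Rather than keeping docker logs -f open for that long, a script can poll the log for the banner.

Below is a minimal bash sketch. It assumes the banner text stays as printed in this log, which may not hold for other image versions. wait_for_rac_ready is a hypothetical helper, not part of Oracle's scripts.

```bash
#!/usr/bin/env bash
# Sketch: wait until a RAC container's log shows the final banner printed by the
# image's setup scripts. wait_for_rac_ready is a hypothetical helper name; the
# banner text is copied from the log above and may differ in other image versions.
READY_BANNER='ORACLE RAC DATABASE IS READY TO USE!'

wait_for_rac_ready() {
  local container="$1"
  local timeout="${2:-3600}"   # give up after this many seconds
  local interval="${3:-30}"    # seconds between checks
  local waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # docker logs replays the full log on each call; -F matches the banner literally
    if docker logs "$container" 2>&1 | grep -qF "$READY_BANNER"; then
      echo "${container}: ready after about ${waited}s"
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "${container}: banner not seen within ${timeout}s" >&2
  return 1
}
```

For example, wait_for_rac_ready racnode1 3600 60 returns 0 once the banner appears. It returns 1 if the banner is still missing after an hour.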

The cluster is now running with a single node in the racnode1 container. The pmon processes of the ASM and database instances are up:

grid 20492 1 0 14:59 ? 00:00:00 asm_pmon_+ASM1
oracle 51242 1 0 15:10 ? 00:00:00 ora_pmon_ORCLCDB1
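Each pmon line above belongs to one instance: asm_pmon_+ASM1 to the ASM instance and ora_pmon_ORCLCDB1 to the database instance. The trailing number identifies the instance on this node.

A small awk filter can extract those names from ps -ef output. This is a sketch: pmon_instances is a hypothetical helper, and it assumes the process name is the last column, as in the output above.

```bash
#!/usr/bin/env bash
# Sketch: list instance names from "ps -ef" style output by looking for pmon
# background processes. pmon_instances is a hypothetical helper name.
pmon_instances() {
  # The process name is the last field, e.g. asm_pmon_+ASM1 or ora_pmon_ORCLCDB1;
  # print whatever follows "_pmon_". A "grep pmon" line does not match "_pmon_".
  awk '$NF ~ /_pmon_/ { name = $NF; sub(/^.*_pmon_/, "", name); print name }'
}
```

Inside the container, ps -ef | pmon_instances should print +ASM1 and ORCLCDB1 at this stage.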

[grid@racnode1 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE racnode1 STABLE

ora.chad

ONLINE ONLINE racnode1 STABLE

ora.net1.network

ONLINE ONLINE racnode1 STABLE

ora.ons

ONLINE ONLINE racnode1 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE racnode1 STABLE

ora.DATA.dg(ora.asmgroup)

1 ONLINE ONLINE racnode1 STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE racnode1 STABLE

ora.LISTENER_SCAN2.lsnr

1 ONLINE ONLINE racnode1 STABLE

ora.LISTENER_SCAN3.lsnr

1 ONLINE ONLINE racnode1 STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE racnode1 Started, STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE racnode1 STABLE

ora.cdp1.cdp

1 ONLINE ONLINE racnode1 STABLE

ora.cdp2.cdp

1 ONLINE ONLINE racnode1 STABLE

ora.cdp3.cdp

1 ONLINE ONLINE racnode1 STABLE

ora.cvu

1 ONLINE ONLINE racnode1 STABLE

ora.orclcdb.db

1 ONLINE ONLINE racnode1 Open,HOME=/u01/app/oracle/product/21.3.0/dbhome_1,STABLE

ora.orclcdb.orclpdb.pdb

1 ONLINE ONLINE racnode1 STABLE

ora.qosmserver

1 ONLINE ONLINE racnode1 STABLE

ora.racnode1.vip

1 ONLINE ONLINE racnode1 STABLE

ora.scan1.vip

1 ONLINE ONLINE racnode1 STABLE

ora.scan2.vip

1 ONLINE ONLINE racnode1 STABLE

ora.scan3.vip

1 ONLINE ONLINE racnode1 STABLE
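Every resource above is ONLINE/ONLINE. That is easy to confirm by eye with one node, but more tedious once more nodes are added.

The awk sketch below prints only the resources whose State column is not ONLINE. crs_not_online is a hypothetical helper. It assumes the layout shown above: a line with the resource name, then lines holding the Target and State values, with cluster resources prefixed by an instance number.

```bash
#!/usr/bin/env bash
# Sketch: report resources from "crsctl stat res -t" output whose State is not
# ONLINE. crs_not_online is a hypothetical helper; it relies on the table layout
# shown above and will also list resources that are intentionally OFFLINE.
crs_not_online() {
  awk '
    /^ora\./ { res = $1; next }          # a resource name line
    res != "" && NF >= 2 {
      i = ($1 ~ /^[0-9]+$/) ? 2 : 1      # cluster resources start with an instance number
      target = $i; state = $(i + 1)
      if (target ~ /^(ONLINE|OFFLINE)$/ && state != "ONLINE")
        print res, target, state
    }
  '
}
```

Run it as the grid user with crsctl stat res -t | crs_not_online. Empty output means every listed resource reports ONLINE.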

As you can see, the orclcdb database has also been created, together with a PDB named orclpdb:

[oracle@racnode1 ~]$ export ORACLE_HOME=/u01/app/oracle/product/21.3.0/dbhome_1
[oracle@racnode1 ~]$ export ORACLE_SID=ORCLCDB1
[oracle@racnode1 ~]$ sqlplus "/as sysdba"

SQL*Plus: Release 21.0.0.0.0 - Production on Wed Oct 5 19:53:46 2022

Version 21.3.0.0.0

Copyright (c) 1982, 2021, Oracle. All rights reserved.

Connected to:

Oracle Database 21c Enterprise Edition Release 21.0.0.0.0 - Production

Version 21.3.0.0.0

SQL> show parameter sga

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

sga_max_size big integer 3760M

sga_target big integer 3760M

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 ORCLPDB READ WRITE NO

SQL> show parameter cluster_data

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

cluster_database boolean TRUE
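The same checks can be scripted instead of typed at the SQL> prompt. This is handy for repeating them after the second node joins.

A sketch is below. It assumes it runs as the oracle user, with ORACLE_HOME and ORACLE_SID exported and sqlplus on PATH, as in the session above. check_rac_instances is a hypothetical helper. The gv$instance query is not part of the original session; it lists one row per running instance across the cluster.

```bash
#!/usr/bin/env bash
# Sketch: run the checks from the session above non-interactively.
# check_rac_instances is a hypothetical helper; it assumes ORACLE_HOME and
# ORACLE_SID are exported and sqlplus is on PATH (oracle OS user).
check_rac_instances() {
  # Quoted heredoc delimiter: the shell must not expand gv$instance.
  sqlplus -s / as sysdba <<'SQL'
set pagesize 100 linesize 200
show parameter cluster_database
select inst_id, instance_name, host_name, status from gv$instance order by inst_id;
show pdbs
exit
SQL
}
```

On racnode1 at this stage, the gv$instance query should return a single row. After node addition, it should return one row per node.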

Adding the second node

To add the second node, we repeat the steps used to create the first node's container. Behind the scenes, the node is added by the addnode script (note OP_TYPE=ADDNODE and EXISTING_CLS_NODES=racnode1 in the command below):

[root@OEL7 ~]# docker create -t -i \
  --hostname racnode2 \
  --volume /boot:/boot:ro \
  --volume /dev/shm \
  --tmpfs /dev/shm:rw,exec,size=4G \
  --volume /opt/containers/rac_host_file:/etc/hosts \
  --volume /opt/.secrets:/run/secrets:ro \
  --volume /etc/localtime:/etc/localtime:ro \
  --cpuset-cpus 4-7 \
  --memory 16G \
  --memory-swap 32G \
  --sysctl kernel.shmall=2097152 \
  --sysctl "kernel.sem=250 32000 100 128" \
  --sysctl kernel.shmmax=8589934592 \
  --sysctl kernel.shmmni=4096 \
  --dns-search=example.com \
  --dns=10.0.20.2 \
  --device=/dev/loop0:/dev/asm_disk1 \
  --device=/dev/loop1:/dev/asm_disk2 \
  --privileged=false \
  --cap-add=SYS_NICE \
  --cap-add=SYS_RESOURCE \
  --cap-add=NET_ADMIN \
  -e EXISTING_CLS_NODES=racnode1 \
  -e DNS_SERVERS=10.0.20.2 \
  -e NODE_VIP=10.0.20.162 \
  -e VIP_HOSTNAME=racnode2-vip \
  -e PRIV_IP=192.168.10.152 \
  -e PRIV_HOSTNAME=racnode2-priv \
  -e PUBLIC_IP=10.0.20.152 \
  -e PUBLIC_HOSTNAME=racnode2 \
  -e SCAN_NAME=racnode-scan \
  -e OP_TYPE=ADDNODE \
  -e DOMAIN=example.com \
  -e ASM_DEVICE_LIST=/dev/asm_disk1,/dev/asm_disk2 \
  -e ASM_DISCOVERY_DIR=/dev \
  -e ORACLE_SID=ORCLCDB \
  -e COMMON_OS_PWD_FILE=common_os_pwdfile.enc \
  -e PWD_KEY=pwd.key \
  --restart=always --tmpfs=/run -v /sys/fs/cgroup:/sys/fs/cgroup:ro \
  --cpu-rt-runtime=95000 --ulimit rtprio=99 \
  --name racnode2 \
  oracle/database-rac:21.3.0

0dfa77c7f8f8b5e3bc1d5b2b2739448743dcb98a0e660ede087281b6a6aa0324
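Under the addressing used in this article, the network attachment that follows depends only on the node number. racnode1 uses 10.0.20.151 and 192.168.10.151; racnode2 uses 10.0.20.152 and 192.168.10.152.

The sketch below prints those commands for node N so they can be reviewed before running. rac_net_cmds is a hypothetical helper. It assumes the network names rac_pub_nw1 and rac_priv_nw1 and single-digit node numbers.

```bash
#!/usr/bin/env bash
# Sketch: print (not run) the per-node network commands, assuming this article's
# addressing: public 10.0.20.15N on rac_pub_nw1, private 192.168.10.15N on
# rac_priv_nw1. rac_net_cmds is a hypothetical helper name.
rac_net_cmds() {
  local n="$1"
  case "$n" in
    [1-9]) ;;                                   # the .15N scheme only fits nodes 1-9
    *) echo "node number must be 1-9" >&2; return 1 ;;
  esac
  local name="racnode${n}"
  echo "docker network disconnect bridge ${name}"
  echo "docker network connect rac_pub_nw1 --ip 10.0.20.15${n} ${name}"
  echo "docker network connect rac_priv_nw1 --ip 192.168.10.15${n} ${name}"
  echo "docker start ${name}"
}
```

rac_net_cmds 2 prints the four commands run next for racnode2. Piping its output to sh would execute them.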

[root@OEL7 ~]# docker network disconnect bridge racnode2
[root@OEL7 ~]# docker network connect rac_pub_nw1 --ip 10.0.20.152 racnode2
[root@OEL7 ~]# docker network connect rac_priv_nw1 --ip 192.168.10.152 racnode2
[root@OEL7 ~]# docker start racnode2
racnode2
[root@OEL7 ~]# docker logs racnode2 -f

Creating env variables file /etc/rac_env_vars

Starting Systemd

systemd 219 running in system mode. (+PAM +AUDIT +SELINUX +IMA -APPARMOR +SMACK +SYSVINIT +UTMP +LIBCRYPTSETUP +GCRYPT +GNUTLS +ACL +XZ +LZ4 -SECCOMP +BLKID +ELFUTILS +KMOD +IDN)

Detected virtualization other.

Detected architecture x86-64.

Welcome to Oracle Linux Server 7.9!

Set hostname to <racnode2>.

[/usr/lib/systemd/system/systemd-pstore.service:22] Unknown lvalue 'StateDirectory' in section 'Service'

Cannot add dependency job for unit display-manager.service, ignoring: Unit not found.

[ OK ] Reached target Local Encrypted Volumes.

[ OK ] Created slice Root Slice.

[ OK ] Listening on /dev/initctl Compatibility Named Pipe.

[ OK ] Started Dispatch Password Requests to Console Directory Watch.

[ OK ] Reached target RPC Port Mapper.

[ OK ] Listening on Delayed Shutdown Socket.

[ OK ] Listening on Journal Socket.

[ OK ] Created slice User and Session Slice.

[ OK ] Started Forward Password Requests to Wall Directory Watch.

[ OK ] Created slice System Slice.

Starting Journal Service...

Starting Read and set NIS domainname from /etc/sysconfig/network...

Starting Configure read-only root support...

Starting Rebuild Hardware Database...

[ OK ] Reached target Slices.

[ OK ] Reached target Local File Systems (Pre).

[ OK ] Created slice system-getty.slice.

[ OK ] Reached target Swap.

[ OK ] Started Journal Service.

[ OK ] Started Read and set NIS domainname from /etc/sysconfig/network.

Starting Flush Journal to Persistent Storage...

[ OK ] Started Configure read-only root support.

[ OK ] Reached target Local File Systems.

Starting Mark the need to relabel after reboot...

Starting Preprocess NFS configuration...

Starting Rebuild Journal Catalog...

Starting Load/Save Random Seed...

[ OK ] Started Load/Save Random Seed.

[ OK ] Started Mark the need to relabel after reboot.

[ OK ] Started Preprocess NFS configuration.

[ OK ] Started Flush Journal to Persistent Storage.

Starting Create Volatile Files and Directories...

[ OK ] Started Rebuild Journal Catalog.

[ OK ] Started Create Volatile Files and Directories.

Mounting RPC Pipe File System...

Starting Update UTMP about System Boot/Shutdown...

[FAILED] Failed to mount RPC Pipe File System.

See 'systemctl status var-lib-nfs-rpc_pipefs.mount' for details.

[DEPEND] Dependency failed for rpc_pipefs.target.

[DEPEND] Dependency failed for RPC security service for NFS client and server.

[ OK ] Started Update UTMP about System Boot/Shutdown.

[ OK ] Started Rebuild Hardware Database.

Starting Update is Completed...

[ OK ] Started Update is Completed.

[ OK ] Reached target System Initialization.

[ OK ] Listening on D-Bus System Message Bus Socket.

[ OK ] Started Daily Cleanup of Temporary Directories.

[ OK ] Reached target Timers.

[ OK ] Listening on RPCbind Server Activation Socket.

Starting RPC bind service...

[ OK ] Reached target Sockets.

[ OK ] Started Flexible branding.

[ OK ] Reached target Paths.

[ OK ] Reached target Basic System.

Starting LSB: Bring up/down networking...

[ OK ] Started D-Bus System Message Bus.

Starting Self Monitoring and Reporting Technology (SMART) Daemon...

Starting Login Service...

Starting Resets System Activity Logs...

Starting GSSAPI Proxy Daemon...

Starting OpenSSH Server Key Generation...

[ OK ] Started RPC bind service.

Starting Cleanup of Temporary Directories...

[ OK ] Started Cleanup of Temporary Directories.

[ OK ] Started Login Service.

[ OK ] Started Resets System Activity Logs.

[ OK ] Started GSSAPI Proxy Daemon.

[ OK ] Reached target NFS client services.

[ OK ] Reached target Remote File Systems (Pre).

[ OK ] Reached target Remote File Systems.

Starting Permit User Sessions...

[ OK ] Started Permit User Sessions.

[ OK ] Started Command Scheduler.

[ OK ] Started OpenSSH Server Key Generation.

[ OK ] Started LSB: Bring up/down networking.

[ OK ] Reached target Network.

Starting OpenSSH server daemon...

Starting /etc/rc.d/rc.local Compatibility...

[ OK ] Reached target Network is Online.

Starting Notify NFS peers of a restart...

[ OK ] Started Notify NFS peers of a restart.

[ OK ] Started OpenSSH server daemon.

[ OK ] Started /etc/rc.d/rc.local Compatibility.

[ OK ] Started Console Getty.

[ OK ] Reached target Login Prompts.

10-05-2022 15:29:44 +0330 : : Process id of the program :

10-05-2022 15:29:44 +0330 : : #################################################

10-05-2022 15:29:44 +0330 : : Starting Grid Installation

10-05-2022 15:29:44 +0330 : : #################################################

10-05-2022 15:29:44 +0330 : : Pre-Grid Setup steps are in process

10-05-2022 15:29:44 +0330 : : Process id of the program :

10-05-2022 15:29:44 +0330 : : Disable failed service var-lib-nfs-rpc_pipefs.mount

10-05-2022 15:29:44 +0330 : : Resetting Failed Services

10-05-2022 15:29:44 +0330 : : Sleeping for 60 seconds

[ OK ] Started Self Monitoring and Reporting Technology (SMART) Daemon.

[ OK ] Reached target Multi-User System.

[ OK ] Reached target Graphical Interface.

Starting Update UTMP about System Runlevel Changes...

[ OK ] Started Update UTMP about System Runlevel Changes.

Oracle Linux Server 7.9

Kernel 4.14.35-1818.3.3.el7uek.x86_64 on an x86_64

racnode2 login: 10-05-2022 15:30:44 +0330 : : Systemctl state is running!

10-05-2022 15:30:44 +0330 : : Setting correct permissions for /bin/ping

10-05-2022 15:30:44 +0330 : : Public IP is set to 10.0.20.152

10-05-2022 15:30:44 +0330 : : RAC Node PUBLIC Hostname is set to racnode2

10-05-2022 15:30:44 +0330 : : Preparing host line for racnode2

10-05-2022 15:30:44 +0330 : : Adding \n10.0.20.152\tracnode2.example.com\tracnode2 to /etc/hosts

10-05-2022 15:30:44 +0330 : : Preparing host line for racnode2-priv

10-05-2022 15:30:44 +0330 : : Adding \n192.168.10.152\tracnode2-priv.example.com\tracnode2-priv to /etc/hosts

10-05-2022 15:30:44 +0330 : : Preparing host line for racnode2-vip

10-05-2022 15:30:44 +0330 : : Adding \n10.0.20.162\tracnode2-vip.example.com\tracnode2-vip to /etc/hosts

10-05-2022 15:30:44 +0330 : : Preparing host line for racnode-scan

10-05-2022 15:30:44 +0330 : : Preapring Device list

10-05-2022 15:30:44 +0330 : : Changing Disk permission and ownership /dev/asm_disk1

10-05-2022 15:30:44 +0330 : : Changing Disk permission and ownership /dev/asm_disk2

10-05-2022 15:30:44 +0330 : : Preapring Dns Servers list

10-05-2022 15:30:44 +0330 : : Setting DNS Servers

10-05-2022 15:30:44 +0330 : : Adding nameserver 10.0.20.2 in /etc/resolv.conf.

10-05-2022 15:30:44 +0330 : : #####################################################################

10-05-2022 15:30:44 +0330 : : RAC setup will begin in 2 minutes

10-05-2022 15:30:44 +0330 : : ####################################################################

10-05-2022 15:31:14 +0330 : : ###################################################

10-05-2022 15:31:14 +0330 : : Pre-Grid Setup steps completed

10-05-2022 15:31:14 +0330 : : ###################################################

10-05-2022 15:31:14 +0330 : : Checking if grid is already configured

10-05-2022 15:31:14 +0330 : : Public IP is set to 10.0.20.152

10-05-2022 15:31:14 +0330 : : RAC Node PUBLIC Hostname is set to racnode2

10-05-2022 15:31:14 +0330 : : Domain is defined to example.com

10-05-2022 15:31:14 +0330 : : Setting Existing Cluster Node for node addition operation. This will be retrieved from racnode1

10-05-2022 15:31:14 +0330 : : Existing Node Name of the cluster is set to racnode1

10-05-2022 15:31:14 +0330 : : 10.0.20.151

10-05-2022 15:31:14 +0330 : : Existing Cluster node resolved to IP. Check passed

10-05-2022 15:31:14 +0330 : : Default setting of AUTO GNS VIP set to false. If you want to use AUTO GNS VIP, please pass DHCP_CONF as an env parameter set to true

10-05-2022 15:31:14 +0330 : : RAC VIP set to 10.0.20.162

10-05-2022 15:31:14 +0330 : : RAC Node VIP hostname is set to racnode2-vip

10-05-2022 15:31:14 +0330 : : SCAN_NAME name is racnode-scan

10-05-2022 15:31:14 +0330 : : 10.0.20.171

10.0.20.173

10.0.20.172

10-05-2022 15:31:14 +0330 : : SCAN Name resolving to IP. Check Passed!

10-05-2022 15:31:14 +0330 : : SCAN_IP set to the empty string

10-05-2022 15:31:14 +0330 : : RAC Node PRIV IP is set to 192.168.10.152

10-05-2022 15:31:14 +0330 : : RAC Node private hostname is set to racnode2-priv

10-05-2022 15:31:14 +0330 : : CMAN_NAME set to the empty string

10-05-2022 15:31:14 +0330 : : CMAN_IP set to the empty string

10-05-2022 15:31:14 +0330 : : Password file generated

10-05-2022 15:31:14 +0330 : : Common OS Password string is set for Grid user

10-05-2022 15:31:14 +0330 : : Common OS Password string is set for Oracle user

10-05-2022 15:31:14 +0330 : : GRID_RESPONSE_FILE env variable set to empty. AddNode.sh will use standard cluster responsefile

10-05-2022 15:31:14 +0330 : : Location for User script SCRIPT_ROOT set to /common_scripts

10-05-2022 15:31:14 +0330 : : ORACLE_SID is set to ORCLCDB

10-05-2022 15:31:14 +0330 : : Setting random password for root/grid/oracle user

10-05-2022 15:31:14 +0330 : : Setting random password for grid user

10-05-2022 15:31:14 +0330 : : Setting random password for oracle user

10-05-2022 15:31:14 +0330 : : Setting random password for root user

10-05-2022 15:31:14 +0330 : : Cluster Nodes are racnode1 racnode2

10-05-2022 15:31:14 +0330 : : Running SSH setup for grid user between nodes racnode1 racnode2

10-05-2022 15:31:26 +0330 : : Running SSH setup for oracle user between nodes racnode1 racnode2

10-05-2022 15:31:38 +0330 : : SSH check fine for the racnode1

10-05-2022 15:31:38 +0330 : : SSH check fine for the racnode2

10-05-2022 15:31:38 +0330 : : SSH check fine for the racnode2

10-05-2022 15:31:38 +0330 : : SSH check fine for the oracle@racnode1

10-05-2022 15:31:38 +0330 : : SSH check fine for the oracle@racnode2

10-05-2022 15:31:38 +0330 : : SSH check fine for the oracle@racnode2

10-05-2022 15:31:38 +0330 : : Setting Device permission to grid and asmadmin on all the cluster nodes

10-05-2022 15:31:38 +0330 : : Nodes in the cluster racnode2

10-05-2022 15:31:38 +0330 : : Setting Device permissions for RAC Install on racnode2

10-05-2022 15:31:38 +0330 : : Preapring ASM Device list

10-05-2022 15:31:38 +0330 : : Changing Disk permission and ownership

10-05-2022 15:31:38 +0330 : : Command : su - $GRID_USER -c "ssh $node sudo chown $GRID_USER:asmadmin $device" execute on racnode2

10-05-2022 15:31:39 +0330 : : Command : su - $GRID_USER -c "ssh $node sudo chmod 660 $device" execute on racnode2

10-05-2022 15:31:39 +0330 : : Populate Rac Env Vars on Remote Hosts

10-05-2022 15:31:39 +0330 : : Command : su - $GRID_USER -c "ssh $node sudo echo \"export ASM_DEVICE_LIST=${ASM_DEVICE_LIST}\" >> /etc/rac_env_vars" execute on racnode2

10-05-2022 15:31:39 +0330 : : Changing Disk permission and ownership

10-05-2022 15:31:39 +0330 : : Command : su - $GRID_USER -c "ssh $node sudo chown $GRID_USER:asmadmin $device" execute on racnode2

10-05-2022 15:31:39 +0330 : : Command : su - $GRID_USER -c "ssh $node sudo chmod 660 $device" execute on racnode2

10-05-2022 15:31:39 +0330 : : Populate Rac Env Vars on Remote Hosts

10-05-2022 15:31:39 +0330 : : Command : su - $GRID_USER -c "ssh $node sudo echo \"export ASM_DEVICE_LIST=${ASM_DEVICE_LIST}\" >> /etc/rac_env_vars" execute on racnode2

10-05-2022 15:31:39 +0330 : : Checking Cluster Status on racnode1

10-05-2022 15:31:39 +0330 : : Checking Cluster

10-05-2022 15:31:39 +0330 : : Cluster Check on remote node passed

10-05-2022 15:31:40 +0330 : : Cluster Check went fine

10-05-2022 15:31:40 +0330 : : CRSD Check went fine

10-05-2022 15:31:40 +0330 : : CSSD Check went fine

10-05-2022 15:31:40 +0330 : : EVMD Check went fine

10-05-2022 15:31:40 +0330 : : Generating Responsefile for node addition

10-05-2022 15:31:40 +0330 : : Clustered Nodes are set to racnode2:racnode2-vip:HUB

10-05-2022 15:31:40 +0330 : : Running Cluster verification utility for new node racnode2 on racnode1

10-05-2022 15:31:40 +0330 : : Nodes in the cluster racnode2

10-05-2022 15:31:40 +0330 : : ssh to the node racnode1 and executing cvu checks on racnode2

10-05-2022 15:32:33 +0330 : : Checking /tmp/cluvfy_check.txt if there is any failed check.

This software is "454" days old. It is a best practice to update the CRS home by downloading and applying the latest release update. Refer to MOS note 2731675.1 for more details.

Performing following verification checks ...

Physical Memory ...PASSED

Available Physical Memory ...PASSED

Swap Size ...PASSED

Free Space: racnode2:/usr,racnode2:/var,racnode2:/etc,racnode2:/u01/app/21.3.0/grid,racnode2:/sbin,racnode2:/tmp ...PASSED

Free Space: racnode1:/usr,racnode1:/var,racnode1:/etc,racnode1:/u01/app/21.3.0/grid,racnode1:/sbin,racnode1:/tmp ...PASSED

User Existence: oracle ...

Users With Same UID: 54321 ...PASSED

User Existence: oracle ...PASSED

User Existence: grid ...

Users With Same UID: 54332 ...PASSED

User Existence: grid ...PASSED

User Existence: root ...

Users With Same UID: 0 ...PASSED

User Existence: root ...PASSED

Group Existence: asmadmin ...PASSED

Group Existence: asmoper ...PASSED

Group Existence: asmdba ...PASSED

Group Existence: oinstall ...PASSED

Group Membership: oinstall ...PASSED

Group Membership: asmdba ...PASSED

Group Membership: asmadmin ...PASSED

Group Membership: asmoper ...PASSED

Run Level ...PASSED

Hard Limit: maximum open file descriptors ...PASSED

Soft Limit: maximum open file descriptors ...PASSED

Hard Limit: maximum user processes ...PASSED

Soft Limit: maximum user processes ...PASSED

Soft Limit: maximum stack size ...PASSED

Architecture ...PASSED

OS Kernel Version ...PASSED

OS Kernel Parameter: semmsl ...PASSED

OS Kernel Parameter: semmns ...PASSED

OS Kernel Parameter: semopm ...PASSED

OS Kernel Parameter: semmni ...PASSED

OS Kernel Parameter: shmmax ...PASSED

OS Kernel Parameter: shmmni ...PASSED

OS Kernel Parameter: shmall ...PASSED

OS Kernel Parameter: file-max ...PASSED

OS Kernel Parameter: ip_local_port_range ...PASSED

OS Kernel Parameter: rmem_default ...PASSED

OS Kernel Parameter: rmem_max ...PASSED

OS Kernel Parameter: wmem_default ...PASSED

OS Kernel Parameter: wmem_max ...PASSED

OS Kernel Parameter: aio-max-nr ...PASSED

OS Kernel Parameter: panic_on_oops ...PASSED

Package: kmod-20-21 (x86_64) ...PASSED

Package: kmod-libs-20-21 (x86_64) ...PASSED

Package: binutils-2.23.52.0.1 ...PASSED

Package: libgcc-4.8.2 (x86_64) ...PASSED

Package: libstdc++-4.8.2 (x86_64) ...PASSED

Package: sysstat-10.1.5 ...PASSED

Package: ksh ...PASSED

Package: make-3.82 ...PASSED

Package: glibc-2.17 (x86_64) ...PASSED

Package: glibc-devel-2.17 (x86_64) ...PASSED

Package: libaio-0.3.109 (x86_64) ...PASSED

Package: nfs-utils-1.2.3-15 ...PASSED

Package: smartmontools-6.2-4 ...PASSED

Package: net-tools-2.0-0.17 ...PASSED

Package: policycoreutils-2.5-17 ...PASSED

Package: policycoreutils-python-2.5-17 ...PASSED

Users With Same UID: 0 ...PASSED

Current Group ID ...PASSED

Root user consistency ...PASSED

Node Addition ...

CRS Integrity ...PASSED

Clusterware Version Consistency ...PASSED

'/u01/app/21.3.0/grid' ...PASSED

Node Addition ...PASSED

Host name ...PASSED

Node Connectivity ...

Hosts File ...PASSED

Check that maximum (MTU) size packet goes through subnet ...PASSED

subnet mask consistency for subnet "10.0.20.0" ...PASSED

subnet mask consistency for subnet "192.168.10.0" ...PASSED

Node Connectivity ...PASSED

Multicast or broadcast check ...PASSED

ASM Network ...PASSED

Device Checks for ASM ...

Access Control List check ...PASSED

Device Checks for ASM ...PASSED

Database home availability ...PASSED

OCR Integrity ...PASSED

Time zone consistency ...PASSED

User Not In Group "root": grid ...PASSED

Time offset between nodes ...PASSED

resolv.conf Integrity ...PASSED

DNS/NIS name service ...PASSED

User Equivalence ...PASSED

Software home: /u01/app/21.3.0/grid ...PASSED

/dev/shm mounted as temporary file system ...PASSED

zeroconf check ...PASSED

Pre-check for node addition was successful.

CVU operation performed: stage -pre nodeadd

Date: Oct 5, 2022 12:01:41 PM

Clusterware version: 21.0.0.0.0

CVU home: /u01/app/21.3.0/grid

Grid home: /u01/app/21.3.0/grid

User: grid

Operating system: Linux4.14.35-1818.3.3.el7uek.x86_64

10-05-2022 15:32:33 +0330 : : CVU Checks are ignored as IGNORE_CVU_CHECKS set to true. It is recommended to set IGNORE_CVU_CHECKS to false and meet all the cvu checks requirement. RAC installation might fail, if there are failed cvu checks.

10-05-2022 15:32:33 +0330 : : Running Node Addition and cluvfy test for node racnode2

10-05-2022 15:32:33 +0330 : : Copying /tmp/grid_addnode_21c.rsp on remote node racnode1

10-05-2022 15:32:33 +0330 : : Running GridSetup.sh on racnode1 to add the node to existing cluster

10-05-2022 15:33:28 +0330 : : Node Addition performed. removing Responsefile

10-05-2022 15:33:28 +0330 : : Running root.sh on node racnode2

10-05-2022 15:33:28 +0330 : : Nodes in the cluster racnode2

10-05-2022 15:37:01 +0330 : : Checking Cluster

10-05-2022 15:37:01 +0330 : : Cluster Check passed

10-05-2022 15:37:01 +0330 : : Cluster Check went fine

10-05-2022 15:37:01 +0330 : : CRSD Check went fine

10-05-2022 15:37:01 +0330 : : CSSD Check went fine

10-05-2022 15:37:01 +0330 : : EVMD Check went fine

10-05-2022 15:37:01 +0330 : : Removing /tmp/cluvfy_check.txt as cluster check has passed

10-05-2022 15:37:01 +0330 : : Checking Cluster Class

10-05-2022 15:37:01 +0330 : : Checking Cluster Class

10-05-2022 15:37:01 +0330 : : Cluster class is CRS-41008: Cluster class is 'Standalone Cluster'

10-05-2022 15:37:01 +0330 : : Performing DB Node addition

10-05-2022 15:37:38 +0330 : : Node Addition went fine for racnode2

10-05-2022 15:37:38 +0330 : : Running root.sh

10-05-2022 15:37:38 +0330 : : Nodes in the cluster racnode2

10-05-2022 15:37:38 +0330 : : Adding DB Instance

10-05-2022 15:37:38 +0330 : : Adding DB Instance on racnode1

10-05-2022 15:39:35 +0330 : : Checking DB status

10-05-2022 15:39:35 +0330 : : #################################################################

10-05-2022 15:39:35 +0330 : : Oracle Database ORCLCDB is up and running on racnode2

10-05-2022 15:39:35 +0330 : : #################################################################

10-05-2022 15:39:35 +0330 : : Running User Script

10-05-2022 15:39:35 +0330 : : Setting Remote Listener

10-05-2022 15:39:35 +0330 : : ####################################

10-05-2022 15:39:35 +0330 : : ORACLE RAC DATABASE IS READY TO USE!

10-05-2022 15:39:35 +0330 : : ####################################

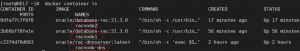
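The node-addition log says the CVU checks were skipped because IGNORE_CVU_CHECKS was set to true. It also warns that the RAC installation might fail if any check failed. In that mode a failed check does not stop the setup, so it may be worth scanning the cluvfy output for any status other than PASSED. The sketch below is a minimal Python helper for that. It assumes each check is printed as `Name ...STATUS`, as in the output above. The function name `non_passed_checks` is hypothetical. Group headers such as `Node Addition ...`, which carry no status, are skipped.

```python
import re
from typing import List, Tuple

# Matches cluvfy lines such as "Hosts File ...PASSED".
# Group headers like "Node Addition ..." have no status after the dots and do not match.
CHECK_LINE = re.compile(r"^(?P<name>.+?)\s*\.\.\.(?P<status>[A-Z]+)")


def non_passed_checks(cvu_output: str) -> List[Tuple[str, str]]:
    """Return (check name, status) for every check whose status is not PASSED."""
    problems = []
    for line in cvu_output.splitlines():
        match = CHECK_LINE.match(line.strip())
        if match and match.group("status") != "PASSED":
            problems.append((match.group("name"), match.group("status")))
    return problems
```

An empty result means every reported check passed. Anything else is worth fixing before relying on the cluster, even though the setup completed.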

The second node has been added to the cluster as well. The container list below shows it running alongside racnode1 and the DNS container:

[root@OEL7 ~]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

0dfa77c7f8f8 oracle/database-rac:21.3.0 "/bin/sh -c /usr/bin…" 17 minutes ago Up 17 minutes racnode2

2b66bf78fe1e oracle/database-rac:21.3.0 "/bin/sh -c /usr/bin…" 56 minutes ago Up 56 minutes racnode1

c2374d70d683 oracle/rac-dnsserver:latest "/bin/sh -c 'exec $S…" 2 hours ago Up 2 hours racnode-dns

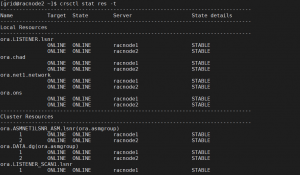
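To run the same check from a script, `docker container ls` accepts a Go-template `--format` option. For example, `docker container ls --format '{{.Names}}\t{{.Status}}'` prints one `name<TAB>status` line per running container. The minimal sketch below parses that output and reports which expected containers (here racnode-dns, racnode1 and racnode2) are missing or not in an `Up` state. The helper names are hypothetical. By default `docker container ls` lists only running containers, so a stopped container simply shows up as missing.

```python
from typing import Dict, Iterable, List


# Parses lines of the form "<name><TAB><status>", as produced by
# docker container ls --format '{{.Names}}\t{{.Status}}'
def parse_container_status(ls_output: str) -> Dict[str, str]:
    statuses = {}
    for line in ls_output.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        name, _, status = line.partition("\t")
        statuses[name.strip()] = status.strip()
    return statuses


def missing_or_down(ls_output: str, expected: Iterable[str]) -> List[str]:
    """Return the expected containers that are absent or whose status does not start with 'Up'."""
    statuses = parse_container_status(ls_output)
    return [name for name in expected if not statuses.get(name, "").startswith("Up")]
```
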

Connecting to the second node's container also shows that the cluster resources are running correctly:

[root@OEL7 ~]# docker exec -it racnode2 /bin/bash

bash-4.2# su - grid

[grid@racnode2 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE racnode1 STABLE

ONLINE ONLINE racnode2 STABLE

ora.chad

ONLINE ONLINE racnode1 STABLE

ONLINE ONLINE racnode2 STABLE

ora.net1.network

ONLINE ONLINE racnode1 STABLE

ONLINE ONLINE racnode2 STABLE

ora.ons

ONLINE ONLINE racnode1 STABLE

ONLINE ONLINE racnode2 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE racnode1 STABLE

2 ONLINE ONLINE racnode2 STABLE

ora.DATA.dg(ora.asmgroup)

1 ONLINE ONLINE racnode1 STABLE

2 ONLINE ONLINE racnode2 STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE racnode1 STABLE

ora.LISTENER_SCAN2.lsnr

1 ONLINE ONLINE racnode1 STABLE

ora.LISTENER_SCAN3.lsnr

1 ONLINE ONLINE racnode2 STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE racnode1 Started,STABLE

2 ONLINE ONLINE racnode2 Started,STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE racnode1 STABLE

2 ONLINE ONLINE racnode2 STABLE

ora.cdp1.cdp

1 ONLINE ONLINE racnode1 STABLE

ora.cdp2.cdp

1 ONLINE ONLINE racnode1 STABLE

ora.cdp3.cdp

1 ONLINE ONLINE racnode2 STABLE

ora.cvu

1 ONLINE ONLINE racnode1 STABLE

ora.orclcdb.db

1 ONLINE ONLINE racnode1 Open,HOME=/u01/app/oracle/product/21.3.0/dbhome_1,STABLE

2 ONLINE ONLINE racnode2 Open,HOME=/u01/app/oracle/product/21.3.0/dbhome_1,STABLE

ora.orclcdb.orclpdb.pdb

1 ONLINE ONLINE racnode1 STABLE

2 ONLINE ONLINE racnode2 STABLE

ora.qosmserver

1 ONLINE ONLINE racnode1 STABLE

ora.racnode1.vip

1 ONLINE ONLINE racnode1 STABLE

ora.racnode2.vip

1 ONLINE ONLINE racnode2 STABLE

ora.scan1.vip

1 ONLINE ONLINE racnode1 STABLE

ora.scan2.vip

1 ONLINE ONLINE racnode1 STABLE

ora.scan3.vip

1 ONLINE ONLINE racnode2 STABLE

--------------------------------------------------------------------------------

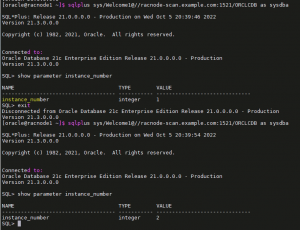
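When there are many resources, checking the whole `crsctl stat res -t` table by eye is error-prone. The minimal sketch below walks the tabular output and returns every resource instance whose Target or State is not ONLINE. The function name is hypothetical. It assumes the layout shown above:

* resource names start with `ora.`;
* cluster resources prefix each row with an instance number;
* long State details wrap onto the following lines.

The sketch ignores the Server column because that column can be empty for instances that are not running.

```python
from typing import List, Optional, Tuple

TARGETS = {"ONLINE", "OFFLINE"}


def offline_resources(crsctl_output: str) -> List[Tuple[str, str, str]]:
    """Return (resource, target, state) for every row where target or state is not ONLINE."""
    problems = []
    resource: Optional[str] = None
    for line in crsctl_output.splitlines():
        tokens = line.split()
        if not tokens:
            continue  # blank line
        if tokens[0].startswith("ora."):
            resource = tokens[0]  # a new resource block starts here
            continue
        if tokens[0].isdigit():
            tokens = tokens[1:]  # drop the instance number of cluster resources
        # Rows of interest begin with a Target value; headers, separators and
        # wrapped State details do not, so they are skipped.
        if resource and len(tokens) >= 2 and tokens[0] in TARGETS:
            target, state = tokens[0], tokens[1]
            if target != "ONLINE" or state != "ONLINE":
                problems.append((resource, target, state))
    return problems
```

For the output above, which shows every resource as ONLINE, the function would return an empty list.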

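It is also possible to connect to the database through the SCAN address. In the two consecutive connections below, the first session lands on instance 1 and the second on instance 2: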

[oracle@racnode1 ~]$ sqlplus sys/Welcome1@//racnode-scan.example.com:1521/ORCLCDB as sysdba

SQL*Plus: Release 21.0.0.0.0 - Production on Wed Oct 5 20:39:46 2022

Version 21.3.0.0.0

Copyright (c) 1982, 2021, Oracle. All rights reserved.

Connected to:

Oracle Database 21c Enterprise Edition Release 21.0.0.0.0 - Production

Version 21.3.0.0.0

SQL> show parameter instance_number

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

instance_number integer 1

SQL> exit

Disconnected from Oracle Database 21c Enterprise Edition Release 21.0.0.0.0 - Production

Version 21.3.0.0.0

[oracle@racnode1 ~]$ sqlplus sys/Welcome1@//racnode-scan.example.com:1521/ORCLCDB as sysdba

SQL*Plus: Release 21.0.0.0.0 - Production on Wed Oct 5 20:39:54 2022

Version 21.3.0.0.0

Copyright (c) 1982, 2021, Oracle. All rights reserved.

Connected to:

Oracle Database 21c Enterprise Edition Release 21.0.0.0.0 - Production

Version 21.3.0.0.0

SQL> show parameter instance_number

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

instance_number integer 2

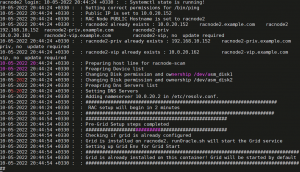
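The SCAN listener typically hands each new connection to one of the instances based on load. That is why the first session above reports instance_number 1 and the second reports 2. Consecutive connections are not guaranteed to alternate, however.

To repeat the test from a script, you can pipe the query into silent SQL*Plus. For example:

`echo "show parameter instance_number" | sqlplus -S sys/Welcome1@//racnode-scan.example.com:1521/ORCLCDB as sysdba`

Then compare the outputs of several runs. The sketch below uses hypothetical helper names. It parses the `show parameter instance_number` output and checks whether more than one instance was reached.

```python
import re
from typing import List, Optional

# Matches the value row printed by "show parameter instance_number",
# e.g. "instance_number integer 1" (the "SQL> show parameter ..." line does not match).
VALUE_ROW = re.compile(r"^\s*instance_number\s+integer\s+(\d+)\s*$", re.MULTILINE)


def instance_number(sqlplus_output: str) -> Optional[int]:
    """Return the instance_number value, or None if the row is not present."""
    match = VALUE_ROW.search(sqlplus_output)
    return int(match.group(1)) if match else None


def reached_multiple_instances(outputs: List[str]) -> bool:
    """True if the collected outputs report at least two distinct instance numbers."""
    numbers = {instance_number(text) for text in outputs} - {None}
    return len(numbers) > 1
```

A False result after a handful of runs does not necessarily mean something is wrong. It may simply reflect the load-based balancing described above.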

When the container of one of these nodes is restarted, output like the following appears on its console:

racnode2 login:

10-05-2022 20:44:24 +0330 : : Systemctl state is running!

10-05-2022 20:44:24 +0330 : : Setting correct permissions for /bin/ping

10-05-2022 20:44:24 +0330 : : Public IP is set to 10.0.20.152

10-05-2022 20:44:24 +0330 : : RAC Node PUBLIC Hostname is set to racnode2

10-05-2022 20:44:24 +0330 : : racnode2 already exists : 10.0.20.152 racnode2.example.com racnode2 192.168.10.152 racnode2-priv.example.com racnode2-priv 10.0.20.162 racnode2-vip.example.com racnode2-vip, no update required

10-05-2022 20:44:24 +0330 : : racnode2-priv already exists : 192.168.10.152 racnode2-priv.example.com priv, no update required

10-05-2022 20:44:24 +0330 : : racnode2-vip already exists : 10.0.20.162 racnode2-vip.example.com vip, no update required

10-05-2022 20:44:24 +0330 : : Preparing host line for racnode-scan

10-05-2022 20:44:24 +0330 : : Preapring Device list

10-05-2022 20:44:24 +0330 : : Changing Disk permission and ownership /dev/asm_disk1

10-05-2022 20:44:24 +0330 : : Changing Disk permission and ownership /dev/asm_disk2

10-05-2022 20:44:24 +0330 : : Preapring Dns Servers list

10-05-2022 20:44:24 +0330 : : Setting DNS Servers

10-05-2022 20:44:24 +0330 : : Adding nameserver 10.0.20.2 in /etc/resolv.conf.

10-05-2022 20:44:24 +0330 : : #####################################################################

10-05-2022 20:44:24 +0330 : : RAC setup will begin in 2 minutes

10-05-2022 20:44:24 +0330 : : ####################################################################

10-05-2022 20:44:54 +0330 : : ###################################################

10-05-2022 20:44:54 +0330 : : Pre-Grid Setup steps completed

10-05-2022 20:44:54 +0330 : : ###################################################

10-05-2022 20:44:54 +0330 : : Checking if grid is already configured

10-05-2022 20:44:54 +0330 : : Grid is installed on racnode2. runOracle.sh will start the Grid service

10-05-2022 20:44:54 +0330 : : Setting up Grid Env for Grid Start

10-05-2022 20:44:54 +0330 : : #############################################################################

10-05-2022 20:44:54 +0330 : : Grid is already installed on this container! Grid will be started by default

10-05-2022 20:44:54 +0330 : : ###############################################################################
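This restart log shows that the container detects the existing Grid installation and starts Grid instead of installing it again. From the host, `docker logs racnode2` returns the same messages. The sketch below (hypothetical names) reports which of a few marker lines have appeared so far. The first two markers come from the restart log above. The `ORACLE RAC DATABASE IS READY TO USE!` marker is taken from the node-addition log. Whether that marker is also printed after a restart is an assumption, because the output above does not show it.

```python
from typing import Dict

# Marker strings copied from the container logs in this article.
# Assumption: "db_ready" is printed after a restart as well, not only after node addition.
MARKERS = {
    "pre_grid_done": "Pre-Grid Setup steps completed",
    "grid_already_installed": "Grid is already installed on this container!",
    "db_ready": "ORACLE RAC DATABASE IS READY TO USE!",
}


def startup_progress(log_text: str) -> Dict[str, bool]:
    """Map each marker key to whether its text occurs anywhere in the log."""
    return {key: marker in log_text for key, marker in MARKERS.items()}
```

A caller could fetch the log periodically (for example with `subprocess.run(["docker", "logs", "racnode2"], capture_output=True, text=True)`) and stop once the desired keys are True. Note that `docker logs` writes the container's stderr to its own stderr, so both streams may need to be checked.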